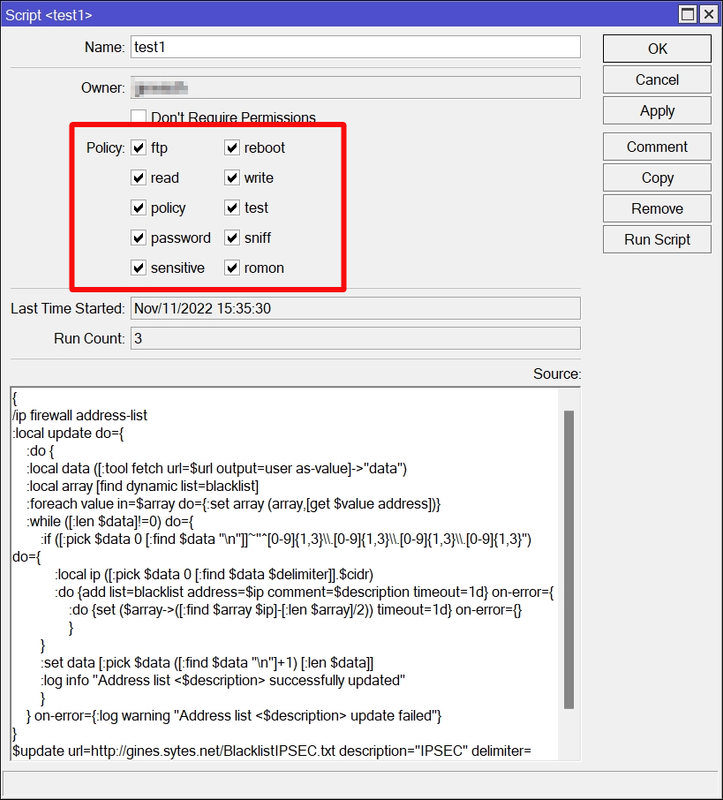

The new "output=user" parameter of the fetch tool opens up new scripting possibilities, and I decided to take full advantage of them.

- The script does not need third-party servers: the address lists are downloaded directly from the source and processed on the router itself.

- The script does NOT save the downloaded files to disk, which prevents premature wear and failure of the storage.

- The script can be adapted to download and process any number of address lists of a similar format. The maximum file size is 63 KiB (64512 bytes), which is far better than the 4 KiB limit.
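As a minimal sketch of the in-memory download described above (not the full script), the fetch result can be read straight into a variable. The URL is one of the lists used later in this post; the variable names `result` and `data` are only illustrative.

```routeros
# Fetch the list into memory; with output=user no file is created on disk
:local result [/tool fetch url="https://www.spamhaus.org/drop/drop.txt" output=user as-value]
# The downloaded text is stored under the "data" key of the returned array
:local data ($result->"data")
:put ("Downloaded " . [:len $data] . " bytes")
```

If the list is larger than the size limit mentioned above, the fetch is expected to fail, so in a real script the call should be wrapped in `:do {...} on-error={...}`.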

At the moment the script can download and update the following lists:

- DShield

- Spamhaus DROP

- Spamhaus EDROP

- Abuse.ch SSLBL
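These lists differ mainly in the character that follows the address on each line, which is why the script takes a delimiter (and an optional CIDR suffix) per list. A rough sketch of that one parsing step on a single made-up line; the sample line and the variable names are assumptions for illustration, not real list data:

```routeros
# Hypothetical tab-separated line in the style of a range list
:local line "192.0.2.0\t192.0.2.255\t24"
:local delimiter "\t"
:local cidr "/24"
# Take everything before the first delimiter and append the CIDR suffix
:local address ([:pick $line 0 [:find $line $delimiter]] . $cidr)
# address becomes 192.0.2.0/24
:put $address
```

For lists that already contain the prefix length, the CIDR suffix is simply left empty and only the delimiter changes.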

Variant 1:

    ip firewall address-list
    :local update do={
      :do {
        # Download the list into memory (nothing is written to disk)
        :local data ([:tool fetch url=$url output=user as-value]->"data")
        # Remove the previous entries of this list before re-adding them
        remove [find list=blacklist comment=$description]
        :while ([:len $data]!=0) do={
          # Process only lines that start with an IPv4 address
          :if ([:pick $data 0 [:find $data "\n"]]~"^[0-9]{1,3}\\.[0-9]{1,3}\\.[0-9]{1,3}\\.[0-9]{1,3}") do={
            :do {add list=blacklist address=([:pick $data 0 [:find $data $delimiter]].$cidr) comment=$description timeout=1d} on-error={}
          }
          # Cut the processed line off the front of the buffer
          :set data [:pick $data ([:find $data "\n"]+1) [:len $data]]
        }
      } on-error={:log warning "Address list <$description> update failed"}
    }
    $update url=https://www.dshield.org/block.txt description=DShield delimiter=("\t") cidr=/24
    $update url=https://www.spamhaus.org/drop/drop.txt description="Spamhaus DROP" delimiter=("\_")
    $update url=https://www.spamhaus.org/drop/edrop.txt description="Spamhaus EDROP" delimiter=("\_")
    $update url=https://sslbl.abuse.ch/blacklist/sslipblacklist.txt description="Abuse.ch SSLBL" delimiter=("\r")

Variant 2:

    ip firewall address-list
    :local update do={
      :do {
        # Download the list into memory (nothing is written to disk)
        :local data ([:tool fetch url=$url output=user as-value]->"data")
        # First half of the array: IDs of the existing dynamic entries
        :local array [find dynamic list=blacklist]
        # Second half of the array: the addresses of those entries, in the same order
        :foreach value in=$array do={:set array ($array,[get $value address])}
        :while ([:len $data]!=0) do={
          # Process only lines that start with an IPv4 address
          :if ([:pick $data 0 [:find $data "\n"]]~"^[0-9]{1,3}\\.[0-9]{1,3}\\.[0-9]{1,3}\\.[0-9]{1,3}") do={
            :local ip ([:pick $data 0 [:find $data $delimiter]].$cidr)
            :do {add list=blacklist address=$ip comment=$description timeout=1d} on-error={
              # The entry already exists: look up its ID by index and refresh the timeout
              :do {set ($array->([:find $array $ip]-[:len $array]/2)) timeout=1d} on-error={}
            }
          }
          # Cut the processed line off the front of the buffer
          :set data [:pick $data ([:find $data "\n"]+1) [:len $data]]
        }
      } on-error={:log warning "Address list <$description> update failed"}
    }
    $update url=https://www.dshield.org/block.txt description=DShield delimiter=("\t") cidr=/24
    $update url=https://www.spamhaus.org/drop/drop.txt description="Spamhaus DROP" delimiter=("\_")
    $update url=https://www.spamhaus.org/drop/edrop.txt description="Spamhaus EDROP" delimiter=("\_")
    $update url=https://sslbl.abuse.ch/blacklist/sslipblacklist.txt description="Abuse.ch SSLBL" delimiter=("\r")

Why is the script using an "array"?

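Because the default "find" function is VERY slow. The array holds the IDs of the existing entries in its first half and their addresses in its second half, so the position of an address minus half the array length gives the index of its ID. This makes the script several times faster, since entries are looked up directly by index instead of calling the default "find" function for every address.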
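The index arithmetic used in Variant 2 can be tried in isolation. This is only a sketch with made-up values: the IDs "*1" and "*2" and both addresses are placeholders, and plain strings stand in for the real entry IDs.

```routeros
# First half: entry IDs, second half: the matching addresses in the same order
:local array {"*1";"*2";"192.0.2.0/24";"198.51.100.0/24"}
:local ip "198.51.100.0/24"
# Position of the address (3) minus half the array length (2) gives the ID position (1)
:local index ([:find $array $ip]-[:len $array]/2)
:put ($array->$index)
```

With these values the lookup is expected to land on the second ID, which is the entry whose timeout the script would refresh.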

Required policy: read, write, test.
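One possible way to run the script regularly is a scheduler entry with the same policies. This is a sketch, assuming the script has been saved under the hypothetical name `blacklist-update`; the 12h interval is also an assumption, chosen only so that the entries are refreshed before their 1d timeout expires.

```routeros
# Hypothetical names; adjust the interval to your needs
/system scheduler add name=blacklist-update interval=12h \
    on-event="/system script run blacklist-update" \
    policy=read,write,test
```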

Perhaps this script will be useful to someone.

P.S. Sorry for my English.