New exciting features for storage

Those of you who are testing 7.18 might have noticed, but most of you probably did not notice yet.

There are plenty of new features in one specific category - storage.

Those of you with devices that support any kind of external storage, like the RB1100, X86, CHR systems or even devices with USB hubs with external drives attached, it would be great if you could test things like:

In default package: https://help.mikrotik.com/docs/spaces/R ... 3346/Disks

- Disk formatting and partitions

- Storage on RAM (tmpFS) when you don't have storage space, but have free RAM

- Swap

- Mount file as block device

In ROSE package https://help.mikrotik.com/docs/spaces/R ... SE-storage

- RAMdisk (slightly different from tmpFS)

- Mounting SMB or NFS onto your router from some NAS and storing files there

- RAID

- BtrFS https://help.mikrotik.com/docs/spaces/R ... 9711/Btrfs

and more

Why are all these features there? Well, soon you will find out, but if you can test some of them, that would help, thanks

teslasystems

Frequent Visitor

- Posts: 76

Re: New exciting features for storage

I want to share my feedback about the SMB client (/disk add type=smb ...) and about working with large numbers of files.

After connecting a new SMB share with about 50 files, the file tree loads pretty quickly and all files are visible in WinBox, but then RouterOS starts doing something weird. It puts a huge load on the network and pulls a lot of traffic from the SMB server. Within about 30 minutes it downloaded about 4.5 GB from the server, and this never stops until you dismount the SMB disk. What the hell is it doing? (TILE, 7.18beta4)

On the Dude graph it looks like this: (attached graph not available)

--------------------------------------------------------------------------------------------------------------------------------------------------

Regarding working with large numbers of files, I often hear that you are improving the behavior for this scenario. But it will never work properly with large numbers of files in the current implementation. The reason is simple: RouterOS scans the whole file structure completely. If you have 10K files or 100K files, it will uselessly scan all these thousands of files, creating unneeded load on the network and the server. And this process starts over again after each reboot.

To work with large numbers of files, the file management mechanism should be completely revised:

1. RouterOS should never scan the whole file structure, only the contents of the root directories on the disks.

2. In WinBox, 'File List' should be replaced by a 'File Browser' with the ability to navigate between directories, as in any OS.

3. CLI file commands should also change their behavior. If you type /file print, it should show the contents of the root directory only. If you need to show the contents of some subdirectory, you may specify it in the 'where' parameter, like /file print where name~"subdir/". Sometimes it may be required to show the contents including subdirectories; in that case an additional parameter could be added, like "/file print include-subdirs" for example.

In Linux there is an 'ls' command, so it shouldn't be a problem to translate file commands into 'ls' commands accordingly.

It may sound wild to those who are used to the current behavior, but without this, any adequate behavior with large numbers of files is impossible.
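The difference between the two listing strategies in this proposal can be illustrated off-router. What follows is a minimal Python sketch, not RouterOS code: `list_directory`, `scan_everything` and `build_demo_tree` are hypothetical helper names, and the directory tree is made up for the demonstration. It only shows that a per-directory listing touches far fewer entries than a full recursive scan.

```python
import os
import tempfile


def list_directory(path):
    """Return the sorted names of the entries directly inside `path` (no recursion)."""
    with os.scandir(path) as entries:
        return sorted(e.name + ("/" if e.is_dir() else "") for e in entries)


def scan_everything(root):
    """Walk the whole tree under `root` and return every file's relative path."""
    found = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = os.path.join(dirpath, name)
            found.append(os.path.relpath(full, root).replace(os.sep, "/"))
    return sorted(found)


def build_demo_tree(root, subdirs=3, files_per_dir=100):
    """Create a made-up tree: one file in the root plus `subdirs` populated directories."""
    with open(os.path.join(root, "readme.txt"), "w") as fh:
        fh.write("demo")
    for d in range(subdirs):
        sub = os.path.join(root, f"subdir{d}")
        os.mkdir(sub)
        for f in range(files_per_dir):
            with open(os.path.join(sub, f"file{f}.bin"), "w") as fh:
                fh.write("x")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        build_demo_tree(root)
        print("root listing:", list_directory(root))
        print("files touched by a full scan:", len(scan_everything(root)))
```

The root listing stays at a handful of entries however many files sit in the subdirectories, while the full scan grows with the total file count.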

--------------------------------------------------------------------------------------------------------------------------------------------------

P.S. It's still unclear whether the SMB client feature is included in the ROSE-storage package, because it works even without this package.


Last edited by teslasystems on Fri Feb 07, 2025 10:53 am, edited 1 time in total.

Re: New exciting features for storage

Which version is this?

teslasystems

Frequent Visitor

- Posts: 76

Re: New exciting features for storage

> Which version is this?

I have specified it: 7.18beta4

Re: New exciting features for storage

The current situation is a disaster for scripts. I have reported my issue here:

viewtopic.php?t=214071#p1120688

... but have not yet opened an issue with support. The implementation looks racy, so all scripts that handle files are randomly crashing.

Sometimes a file is even visible to find, but remove fails anyway, with something like:

```
/file/remove [ find where name="file/with/path" ];
```

Re: New exciting features for storage

The changelog tells you how to do it: use the new parameters (not a bug).

*) file - allow printing specific directories via path parameter;

*) file - added "recursive" and "relative" parameters to "/file/print" for use in conjunction with "path" parameter;

Re: New exciting features for storage

Meh, this shows how old (and grumpy) I am getting. Personally I don't feel particularly excited; the whole thing appears at the moment to be more wishful thinking than anything else, and anyway it all sounds to me like, to make the usual automotive comparison:

"Hey! We added round wheels, an exciting new development from the square ones we had till now".

(the triangular ones, one bump less, were tested but abandoned earlier)

Re: New exciting features for storage

I totally agree, eworm.

This screenshot was made on an RB5009 with ROS 7.18beta4.

The failed script in the screenshot downloads FW blocklists, merges them and creates list entries.

To do this, some files (size <200 kB) are downloaded and stored in "Files", and after the "Address List" is created in the FW menu these files are deleted.

It runs fine on every ROS version before 7.18beta2 and now beta4.

The script itself is provided by eworm.

(please see https://git.eworm.de/cgit/routeros-scripts/about/)

Please look at it, thank you!

Re: New exciting features for storage

> Changelog tells you about how to do it, use the new parameters (not a bug)

Wait... What!?

So find is aware of the file and returns an id. But remove still cannot remove it. Does that require a path now, for a unique id?

```
:local FileID [ /file/find where name="file/with/path" ];
/file/remove path="file/with" $FileID;
```

That's bullshit, no?

Please have another look at that.

Re: New exciting features for storage

We can't replicate what you are describing, but it would actually be better and more correct to do it this way:

```
/file/remove file/with/path
```

and not

```
/file/remove [ /file/find where name="file/with/path" ]
```

Re: New exciting features for storage

All that fails the same way. Not every time, but way too often.

As this is racy you need to remove a file that's not too old. Perhaps I should open an issue and provide more details... The problem is that behavior on racy things changes all the time, so there is no perfect reproducer.


Re: New exciting features for storage

yes, please make a ticket with details how to replicate, how many files you have, which file you removed etc.

Re: New exciting features for storage

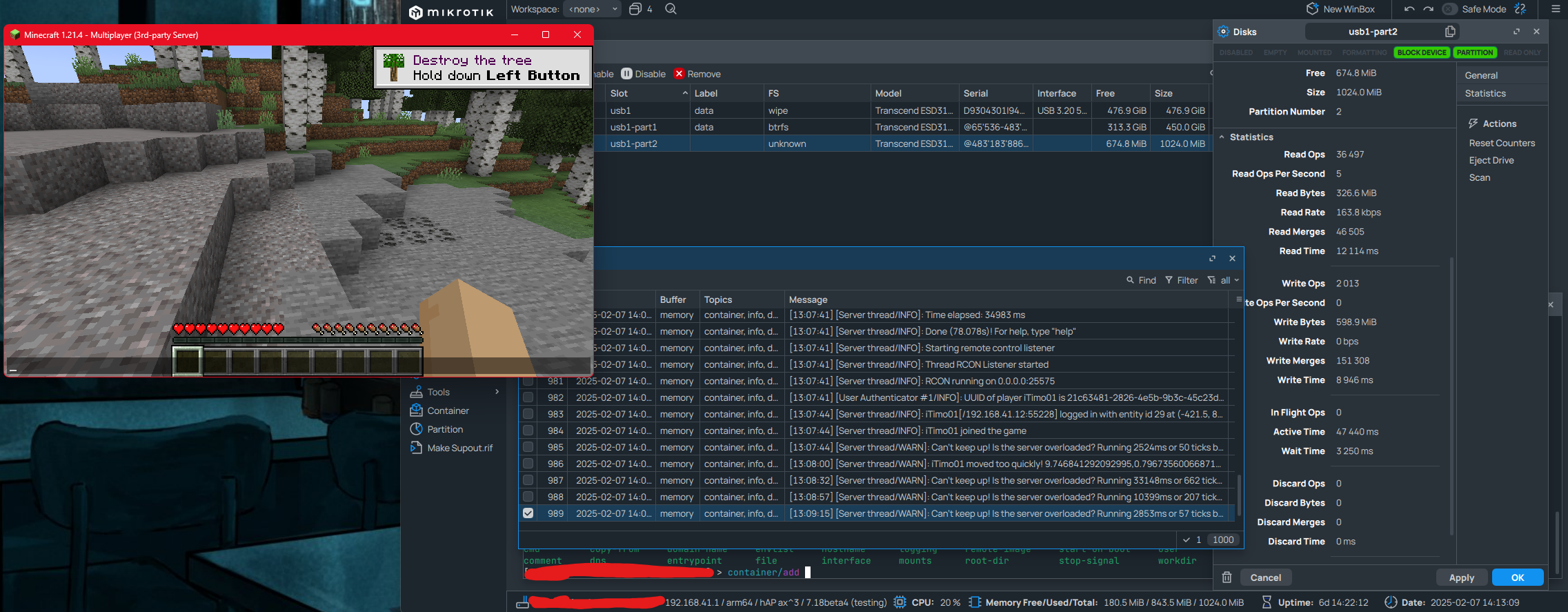

> - Swap

Swap appears to be working great, since I'm now able to spin up a Minecraft server on my ax3 :D

Previously, when I tried to get it working, the router would crash with an OOM condition.

```
container/envs/add key=EULA name=mcsrv value=TRUE
container/envs/add key=MEMORY name=mcsrv value=512M
container/add remote-image=itzg/minecraft-server:latest interface=veth1 envlist=mcsrv root-dir=/usb1-part1/containers/store/mcsrv comment=mcsrv logging=yes
container/start [find comment=mcsrv]
ip/firewall/nat/add action=dst-nat chain=dstnat disabled=yes dst-address=192.168.41.1 dst-port=25565 protocol=tcp to-addresses=172.17.0.2 to-ports=25565
```

Valerio5000

Member Candidate

- Posts: 118

Re: New exciting features for storage

> Why are all these features there? Well, soon you will find out, but if you can test some of them, that would help, thanks

I'll take a guess... you want to enter the NAS market? Tell the truth :D

Anyway, it's always nice to see the MK team developing and proposing new features ;)

Re: New exciting features for storage

Exciting features...? I don't understand why other network products (forti, xtrem, cisco) do like you!

Minecraft in a container on a network security gateway... is it a joke?

And meanwhile, as RouterOS evolves, there are more and more constraints from device mode: functions that were allowed before are now locked and need a physical button press to be changed. When routers are far away, it's a great feature, for sure! But it's better to transform RouterOS into a NAS, a gaming server....

Re: New exciting features for storage

Who said you have to run Minecraft? One forum user did it for testing?

You can run Graylog or Grafana.

Re: New exciting features for storage

> Minecraft on container on network security gateway... it's a joke ?

Yes, and you can definitely run more useful things on a router if you so desire.

teslasystems

Frequent Visitor

- Posts: 76

Re: New exciting features for storage

> need the physical button press to be changed. When router are far, it's a great feature, for sure !

I don't want to sound rude, and sorry for the off-topic, but having hardware at a remote location without additional devices (like GSM sockets or something similar) that let you power-cycle it remotely is very strange, to say the least. Anything could happen, and power-cycling often helps. It would also solve the problem of changing device-mode. So, to me, having such devices is mandatory and I can't explain why people don't have them. Maybe they like to drive (or even fly) to their remote sites each time something happens...

Re: New exciting features for storage

This is RouterOS, not StorageOS. If you desire to add file management and make a NAS out of a router then just spin off ROS into another branch that is developed separately. A storage device does not need BGP and MPLS, and a router/switch does not need NFS, Btrfs support, RAID, NVMe over TCP or a fancy UI for collaborative file sharing. You already have a strong base with basic networking; just cut the unnecessary slack, integrate rose-storage into base and sell it as a separate OS to be run on a new class of devices.

The naming of ROSE is also pretty poor, "RouterOS Enterprise" - 100% enterprise storage, 0% enterprise networking.

---

> You can run Graylog or Grafana.

I wouldn't dare, any more than you would dare to run it on Junos :> Possible? Yes, absolutely! Good idea? In hell.

Re: New exciting features for storage

I, for one, welcome our Storage overlords.

Those who are pooh-poohing things haven't been paying close attention to the hyper-convergence of functions over the last decade or two. Cisco built a virtual router for VMware 15 years ago, and VMware created vSAN shortly thereafter. Now you have Proxmox VE + Ceph, and with a CHR (or two) in the mix, or FRR on the bare metal, customers can build (and have) practically anything on commodity hardware.

Look at what TrueNAS has done with TrueNAS Scale. You put the server on a decent PC, spin up your ZFS pool, go download a bunch of free apps, and/or put a VM on the box. MSPs love this kind of stuff.

It looks like MikroTik is trying to get their hardware and software into this market. You can do a barebones PC, or you can buy a beefy router (RB5009, CCR2004, CCR2x16 or CHR + Ampere) with simple storage, and, like with TrueNAS Scale, load up apps (micro services) on your router.

When ROSE came out, I put an NVMe to SATA adapter in a 2116, punched a hole, ran some thin SATA cables out, and hooked up six SATA drives in a bay the size of a CDROM. It works pretty well.

The advantage MikroTik has over a PC is wirespeed switching/routing (on Marvell platforms). I run a bunch of containers on my 2116. Going to have to add the Minecraft one (didn't know it existed for ARM64).

Re: New exciting features for storage

The way I see it, 'Tik are just making the existing features of the kernel accessible to the user. I welcome that; I do use containers and storage in private and intend to use them in enterprise.

Re: New exciting features for storage

@sirbryan, that’s not gonna happen. ROS is designed as an embedded NOS with its own limitations. When it comes to running ROS as CHR, there are way better options. Plus, MT lacks the skill set and experience, and ROS is too unreliable for storage solutions like hyper-convergence.

Re: New exciting features for storage

I think it makes a great SoHo router though. A couple of SMB shares and SWAP for memory heavy containers is all I need. Finally can retire an rpi and run Homebridge directly on my AX3.

Re: New exciting features for storage

> SWAP for memory heavy containers is all I need

SWAP over USB is a horrible idea and a recipe for kernel deadlocks and freezes - definitely not something you want on a device that is also processing your network traffic. Will it work? Yes, and it's also likely you won't see any issues... until you hit them hard and waste days debugging it.

Re: New exciting features for storage

I got an impression that their SWAP implementation is only for containers and otherwise unused (and isolated) from network functionality.

Re: New exciting features for storage

> Why are all these features there? Well, soon you will find out, but if you can test some of them, that would help, thanks

My bet: fast stable shared resource to provide true HA....

teslasystems

Frequent Visitor

- Posts: 76

Re: New exciting features for storage

> - Storage on RAM (tmpFS) when you don't have storage space, but have free RAM

Oh, and regarding this, please read:

viewtopic.php?t=214077

Re: New exciting features for storage

> I got an impression that their SWAP implementation is only for containers and otherwise unused (and isolated) from network functionality.

Since containers share the kernel with the host, there is no distinction here. Only consider it for devices which have PCI-E lanes exposed over a physical slot (the RBM33G does, for example, but it's hardly a good base for it anyway because of MMIPS and 16M of storage).

Re: New exciting features for storage

Zerotrust cloudflare on a storage device??? ;-PP

guipoletto

Member Candidate

- Posts: 221

Re: New exciting features for storage

Regarding tmpfs:

i ran a script to create "/tmp" on several production devices:

```
/disk/add slot=tmp type=tmpfs;
```

this should create a ramdisk with "up to" 50% of RAM

it failed on all "64M" devices (RB2011iL, RB2011iLS, RB750r2, RB433)

for these, the default of 50% should probably be reviewed

they accepted up to 30M for tmpfs, even when "free-memory" was around 20-24 MB

this leads to another observation:

a Netpower 16P reports 183 MB free (out of 256), and also failed to create the ramdisk, with the same message as the 64M ones:

"failure: too much memory requested for tmpfs/ramdisk"

another Netpower 16P with 199 MB "free" successfully created the ramdisk with the same parameters

this is weird, as 128 MB are clearly free

and also leads to my main question:

when the ramdisk is created near boot time (when RAM is mostly free and unfragmented), what would be the expected behaviour when system RAM usage grows, and the ramdisk already exists with "up to" 50% of RAM, but is empty / near-empty?
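The figures reported in this post can be tabulated. The sketch below is plain Python arithmetic, not RouterOS's actual admission check (which this thread does not describe). `default_request_mb` and `headroom_mb` are hypothetical helper names, the default request is assumed to be exactly 50% of total RAM as stated in the post, and the 64M row uses the upper end of the reported 20-24 MB free range.

```python
def default_request_mb(total_mb, fraction=0.5):
    """Size requested by a tmpfs created with defaults, assuming 50% of total RAM."""
    return total_mb * fraction


def headroom_mb(total_mb, free_mb, fraction=0.5):
    """Free memory that would remain if the whole default request were filled."""
    return free_mb - default_request_mb(total_mb, fraction)


# (label, total RAM in MB, reported free MB, creation succeeded?) as given in the post.
observations = [
    ("64M device", 64, 24, False),
    ("Netpower 16P #1", 256, 183, False),
    ("Netpower 16P #2", 256, 199, True),
]

if __name__ == "__main__":
    for label, total, free, created in observations:
        print(f"{label}: request={default_request_mb(total):.0f} MB, "
              f"headroom={headroom_mb(total, free):.0f} MB, created={created}")
```

On these numbers the two Netpower units differ only in headroom (55 MB versus 71 MB), which would fit some reserved amount in between. The 64M devices accepting 30M with only 20-24 MB free does not fit that simple model, so this is a way of looking at the reports and not an explanation of them.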

teslasystems

Frequent Visitor

- Posts: 76

Re: New exciting features for storage

@guipoletto

It seems that there is some "reserved" memory. My TILE router with 2 GB of RAM reboots with out-of-memory if free RAM goes down to about 150 MB.

Re: New exciting features for storage

> yes, please make a ticket with details how to replicate, how many files you have, which file you removed etc.

Decided against. While this breaks my scripts, I cannot find a really good reproducer. This drove me crazy - every time I thought I was near, it changed behavior. 🤪

Instead I modified my scripts. I bumped the required version to RouterOS 7.15 - the new code for directory creation (

```
/file/add type=directory name=$dir;
```

```
/file/add name=$dir/file; /file/add name=$dir/file;
```

The only downside is that stale files may be around... But as I put everything into a tmpfs this is not much of a concern - a reboot does clean up.

toxicfusion

Member

- Posts: 326

Re: New exciting features for storage

> This is RouterOS, not StorageOS. If you desire to add file management and make a NAS out of a router then just spinoff ROS into another branch that is developed separately. Storage device does not need BGP and MPLS and router/switch does not need NFS, Btrfs support, RAID, NVMe over TCP or fancy UI for collaborative file sharing. You already have strong base with basic networking, just cut unnecessary slack, integrate rose-storage into base and sell it as separate OS to be ran on new class of devices.
>
> The naming of ROSE is also pretty poor, "RouterOS Enterprise" - 100% enterprise storage, 0% enterprise networking.
>
> ---
>
> > You can run Graylog or Grafana.
>
> I wouldn't dare any more than you would dare to run it on Junos :> Possible? Yes, absolutely! Good idea? In hell.

I 1000% agree with you and echo this. MikroTik, please LISTEN -- Cut this bullshit with storage on RouterOS as a network appliance hardware used for routing. Move it to StorageOS and let it be a fork and then let people test the features.

Since RouterOS 7.17 with introduction of device-mode and then "ROSE", we're leaving MikroTik -- moving onto greener pastures. This is after 10+ years. Perhaps we have matured, but we cannot risk our company name and customer satisfaction.

Storage protocols do NOT belong on a router. SMB, NFS, NVME drivers, RAID, etc. WTF? Please STOP. We're done -- we will only use MikroTik for 60Ghz connectivity, that is the only current stable wireless product [besides Cube 60 water ingestion]. Perhaps when MikroTik restructures themselves and they also grow up -- we will circle back and become again interested. Focus should be on better web interface, Winbox development, CAPsMAN management [sync], dynamic routing fixes, or cloud management... get with the times. There are better products out there. MikroTik can be so much more in 2025 and stifle competition with better investment within themselves. Perhaps better leadership guidance?

MikroTik releasing "enterprise" hardware for consumer niche market to tinker. We enjoyed MikroTik when we could "route the world" and felt satisfaction.

teslasystems

Frequent Visitor

- Posts: 76

Re: New exciting features for storage

@toxicfusion If you hate storage features so much, just don't install the ROSE package and don't use any of these features; no one forces you to use them. And if you moved to other "better" solutions - what's your problem? Leave silently without posting this bullshit.

toxicfusion

Member

- Posts: 326

Re: New exciting features for storage

Network storage protocols don't belong on a router, or in this case RouterOS -- even if installed as an additional package. "enterprise my ass" [Apple joke]. This would be fine for an open source project - or for kids' tinker toys. MikroTik putting "Tik in Tinker" as of late.

Introducing storage protocols on a router, home lab or dev -- that then becomes someone's office production network..... is horrifying. The security risks alone should make this a completely separate operating system by MikroTik. Some new IT intern or "Green" IT staff would see this as a cost-saving measure to do SMB on their MikroTik router and put it into production.. scary.

MikroTik, fork the project; create your bootable Linux MikroTik operating system with a basic TCP/IP stack and the ROSE package loaded. Otherwise, people just install a real operating system to do the job. TrueNAS these days is child's play - just works. If MikroTik were to fork this project to create MikroTik NAS -- they'd better hire a better engineer or web designer. Winbox4 or the new Webfig is not going to cut it. It's a sad state.

I get the "appeal" of "all-in-one". But why...... It only makes sense for a hobbyist or home lab..... This serving production workloads or REAL workloads is laughable.

Even if there was a possibility to attach high speed storage [USB-C] via a JBOD external enclosure -- there are better solutions.

toxicfusion

Member

- Posts: 326

Re: New exciting features for storage

Further -

I'd MUCH rather see continued development of containers on RouterOS. Other vendors do this, run containers. E.g. Cisco has run containers on their hardware with great success. Also ARISTA... etc.

Or look at what Palo Alto does. Their management plane is on a completely separate partition and CPU [how you interact and commit changes]; all else runs on the other available CPUs and on the ASICs - data and control plane. Now this would be a better investment of resources at MikroTik.

But yet MikroTik's marketing team loves to "market" some of the CCR hardware as enterprise ready -- IMO there appears to be a shift happening right now, with all that is broken and the lack of focus. [Winbox4 is a mess, 7.17 device-mode]. We're still griping about having to physically touch customer-deployed hardware. If we have to make the customer physically power cycle, or we have to truck roll, we will just be replacing with another vendor.

Last edited by toxicfusion on Tue Feb 11, 2025 8:19 pm, edited 1 time in total.

guipoletto

Member Candidate

- Posts: 221

Re: New exciting features for storage

> network storage protocols dont belong on a router

So long as these features are modularized, i don't see the problem.

toxicfusion

Member

- Posts: 326

Re: New exciting features for storage

Ok -- but it is a waste of CPU cycles and processing power.... which can instead be used to process packets and firewall rules. Not all the MikroTik switch chips are that great, as they don't all have real ASICs.

Storage technology/features do not belong on a router. It's for tinkerers, hobbyists, or homelabs. MikroTik is uncertain of their identity. Are you enterprise, or are you home-lab? If you're both -- there needs to be a clear separation in product lines.

Re: New exciting features for storage

Why not be the one vendor that fits in home lab _and_ enterprise? For us, this is exactly how they got noticed: first used in the home lab, then slowly migrating into the enterprise. Give users the choice; you don't need to run those features.

Re: New exciting features for storage

"yes, please make a ticket with details how to replicate, how many files you have, which file you removed etc."

"Decided against. While this breaks my scripts, I can not find a really good reproducer. This drove me crazy - every time I thought I was near, it changed behavior. 🤪"

As this still broke with my workarounds in place, I dug deeper. I think I have a reproducer now... Please have a look at SUP-179200 for details. Thanks!

Re: New exciting features for storage

We found the issue you describe, it was triggered if the file was in a subdirectory, looks like the next release will have a fix

Re: New exciting features for storage

Eagerly waiting for that then. 😊

-

-

teslasystems

Frequent Visitor

- Posts: 76

- Joined:

Re: New exciting features for storage

What about the issue with SMB that I described at the beginning?

Re: New exciting features for storage

The RouterOS file subsystem will soon have improvements for listing large numbers of files, but normally you should not be using Winbox as a file manager. Use SMB to browse the files.

I did not fully understand: do you also have issues with SMB listing when Winbox is closed?

-

-

teslasystems

Frequent Visitor

- Posts: 76

- Joined:

Re: New exciting features for storage

@normis, listing is OK. But it consumes a lot of traffic even if WinBox is closed; it makes no difference whether it is open or closed. And even if it is open, what is it doing? I'm not downloading any files from the share. I just connected an SMB disk, it quickly downloaded a list of 50 files, and then it eats 9 GB per hour for no reason.

Regarding large numbers of files: I understand that WinBox is not a file browser, but what will happen if you have 100K files? It doesn't matter whether it's SMB or just a USB HDD. RouterOS collects the whole file structure, and this happens over and over again after each reboot. This is the key problem for using it with large numbers of files.

Re: New exciting features for storage

Idea for a useful and interesting feature (which shouldn't be very difficult to implement); when a device has little RAM but enough storage (NAND, SATA, NVME or USB), it would be nice to have a native swap feature (like in standard Linux where a partition/file is used as a swap when RAM runs out)

/system disk add type=swap size=8192 file=/swapfile

I'm thinking of my RB1100 Dude, which keeps rebooting due to out-of-memory conditions even though I have a 256GB SATA SSD and 1GB RAM.

PS: Yes, I know, I could migrate my Dude to CHR... but that's not what this post is about.

Re: New exciting features for storage

The latest beta does have support for swap. 😉

-

-

guipoletto

Member Candidate

- Posts: 221

- Joined:

Re: New exciting features for storage

"The latest beta does have support for swap. 😉"

Swap is evil, and in a ROUTER it largely indicates device abuse.

Exposing some swap for *containers* and the applications hosted therein is one thing: if those crash, who cares.

But if the host system (which is a ROUTER) is forced into swapping, then through device abuse and feature creep it's no longer a router and the network topology needs to be reviewed... (or a beefier router with actual RAM needs to be purchased)

Re: New exciting features for storage

That's right. I didn't see this subtle line in the 7.18 beta changelog. However, I'll wait for it to arrive in the stable channel.

Let's hope it also works for system processes (such as the Dude) as this suggests!

Thanks a lot! :)

PS: The debate is not really whether swap is good or not. I have a 2-year-old device with 1GB of RAM. It was enough for Dude/RouterOS v6... Not enough anymore for RouterOS v7. I'm not going to throw away a $400 device with a redundant power supply and a level 6 license just because it no longer has enough RAM. Swap is a Linux kernel feature that should (if possible) be implemented and, at the user's option, should be usable. In my opinion, it's a more interesting feature than DLNA. :)

Re: New exciting features for storage

Swap is not for the host system. Please read the manual about it before complaining: https://help.mikrotik.com/docs/spaces/R ... -Swapspace

-

-

ConradPino

Member

- Posts: 481

- Joined:

- Location: San Francisco Bay

- Contact:

Re: New exciting features for storage

@normis, thank you and please thank Druvis Timma for making that update Feb 07, 2025 10:17. IMO a super addition.

Re: New exciting features for storage

"We found the issue you describe, it was triggered if the file was in a subdirectory, looks like the next release will have a fix"

This still happens with RouterOS 7.18beta6. Is that version supposed to have the fix?

-

-

teslasystems

Frequent Visitor

- Posts: 76

- Joined:

Re: New exciting features for storage

@normis,

It seems I have found what's happening with SMB. I've captured SMB packets with Wireshark and see that RouterOS is reading the contents of each file (only the first 256 KB). And this repeats again and again.

On the graph at the beginning of the topic, each peak is one reading cycle. That is, RouterOS reads the contents (first 256 KB), then pauses for about 8 seconds; reads again, pauses for 8 seconds; and so on, cyclically. Moreover, it reads each file multiple times within the same cycle, usually 3 times per cycle.

That's why it's consuming so much traffic. It's not normal.

Duplicated this to SUP-158277.
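
A rough sanity check of the numbers reported above, as a minimal Python sketch. It assumes "KB" and "GB" mean KiB and GiB, that the share holds exactly the 50 files mentioned earlier, and that every file is read 3 times per cycle; the function names are made up for illustration.

```python
KIB = 1024
GIB = 1024 ** 3


def traffic_per_cycle_bytes(file_count, read_bytes_per_file, reads_per_cycle):
    """Bytes transferred in one polling cycle if every file is partially re-read."""
    return file_count * read_bytes_per_file * reads_per_cycle


def implied_cycle_seconds(hourly_bytes, cycle_bytes):
    """Cycle period (pause plus read time) that would produce the given hourly traffic."""
    cycles_per_hour = hourly_bytes / cycle_bytes
    return 3600 / cycles_per_hour


# Figures taken from the posts: 50 files, first 256 KB of each, ~3 reads per cycle.
cycle_bytes = traffic_per_cycle_bytes(50, 256 * KIB, 3)

# Observed traffic from the earlier post: about 9 GB per hour.
period = implied_cycle_seconds(9 * GIB, cycle_bytes)

print(f"per cycle: {cycle_bytes / (1024 ** 2):.1f} MiB")
print(f"implied cycle period: {period:.1f} s")
```

Under those assumptions one cycle moves about 37.5 MiB, and 9 GiB per hour corresponds to one cycle roughly every 14.6 seconds, which is in the same range as an 8-second pause plus the time spent reading. This only shows that the reported figures are mutually consistent; it says nothing about why RouterOS re-reads the files.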


Re: New exciting features for storage

"Swap is not for the host system. Please read the manual about it before complaining: https://help.mikrotik.com/docs/spaces/R ... -Swapspace"

That is not how the documentation puts it:

"... This is useful when using containers on RouterOS to be able to run containers that require much more RAM than you RouterOS device has. ..."

It is described as "useful when using containers", meaning ROS will not go OOM if containers consume more memory than is physically available, but it can still leave the reader unsure whether memory pages of other system processes are swapped or not.

If it is really dedicated only to containers, it should be stated like that. I guess it is then implemented with a Linux kernel per-cgroup swap file used only by the container ROS process.

Re: New exciting features for storage

"Swap is not for the host system. Please read the manual about it before complaining: https://help.mikrotik.com/docs/spaces/R ... -Swapspace"

Please explain how you achieve separation of swap usage between host and containers, given that both share the same kernel via namespaces and cgroups. For a container to use swap, that space must be physically available to the kernel on the host. Similarly, you cannot adjust the vm.swappiness value for a container without affecting the host.

"If is really dedicated only for containers, it should be stated like that, I guess then this is implemented with Linux kernel Per-cgroup swap file only for container ROS process."

I don't see how this would work given the ROS documentation, since there is no ability to configure a hard memory limit for a container, just a soft limit. There also does not seem to be any way to dedicate a specific swapfile to a given container, so this would exclude a per-cgroup swapfile too. My best guess so far is that any swap created is simply attached to the host and is shared with containers in the same way host memory is.
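
For readers who want to see the distinction being argued here on an ordinary Linux machine, the following is a minimal Python sketch. It is not RouterOS-specific and nothing in it is confirmed by MikroTik. It assumes a generic Linux host where swap areas are listed system-wide in `/proc/swaps` and where, with cgroup v2, a non-root cgroup exposes a `memory.swap.max` file that caps how much of that shared swap the group may use. The helper names and the sample text are made up for illustration.

```python
def parse_proc_swaps(text):
    """Parse the contents of /proc/swaps into a list of dicts (sizes in KiB)."""
    areas = []
    for line in text.strip().splitlines()[1:]:  # first line is the header row
        fields = line.split()
        if len(fields) < 5:
            continue  # ignore malformed or truncated rows
        areas.append({
            "name": fields[0],
            "type": fields[1],
            "size_kib": int(fields[2]),
            "used_kib": int(fields[3]),
            "priority": int(fields[4]),
        })
    return areas


def parse_swap_max(text):
    """Parse a cgroup v2 memory.swap.max value: None means unlimited ("max")."""
    value = text.strip()
    return None if value == "max" else int(value)


# Hypothetical sample content, shaped like /proc/swaps on a generic Linux host.
SAMPLE_SWAPS = (
    "Filename    Type    Size    Used    Priority\n"
    "/swapfile   file    8388604 1024    -2\n"
)

areas = parse_proc_swaps(SAMPLE_SWAPS)
for area in areas:
    print(area["name"], area["size_kib"], "KiB total,", area["used_kib"], "KiB used")

# A cgroup limited to 0 bytes of swap cannot swap at all; "max" means no cap.
print(parse_swap_max("0\n"), parse_swap_max("max\n"))
```

On such a host the swap area itself belongs to the kernel as a whole, and a per-cgroup limit only restricts who may use it. Whether RouterOS applies a limit of this kind to its host processes is exactly the open question in this thread.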

Re: New exciting features for storage

It would be nice if they explained how they achieved this and stated directly that swap is used only for containers. Here is interesting reading from Open Containers regarding container memory management and a comparison between cgroup v1 and cgroup v2.

Re: New exciting features for storage

Swap must be explicitly turned on. If you don't like how it affects your host (if it does), just do not turn it on.

Re: New exciting features for storage

Cool stuff!

I'll move all my NAS data over to my router now, and I'll convert my NAS into a working BGP router.

How didn't I think of this before? Genius!

-

-

ConradPino

Member

- Posts: 481

- Joined:

- Location: San Francisco Bay

- Contact:

Re: New exciting features for storage

@Cha0s LOL

Re: New exciting features for storage

Better late than never. 😉

Re: New exciting features for storage

https://help.mikrotik.com/docs/spaces/R ... -Swapspace

What's new in 7.18 (2025-Feb-24 10:47):

*) disk - allow to add swap space without container package;

Sorry, it's not clear to me if I can use the SWAP partition only for containers or also with ROS.

I'm asking because I have several hAP ac2 that can't use SMB (router freezes and restarts itself when transfers are large) and containers due to low RAM.

Before changing disk partitions I would like to make sure it is useful...

Thanks

EDIT: I found the answer, Normis wrote:

Swap is not for the host system. Please read the manual about it before complaining: https://help.mikrotik.com/docs/spaces/R ... -Swapspace

Re: New exciting features for storage

https://btrfs.readthedocs.io/en/latest/ ... -practices

RAID56, as of the latest btrfs version, is not considered stable for production.

Re: New exciting features for storage

Even vanilla Btrfs RAID 1 can be a real headache, for example, if a disk intermittently disconnects or fails for some reason and then gets marked as unreliable. Restoring a Btrfs RAID 1 is a pretty complicated process and requires expert knowledge, as @Petch1 pointed out in another thread. In other words, Btrfs RAID 1 is nothing like a normal RAID 1 with automatic resync. There are plenty of examples of this online.

If any kind of Btrfs failure happens with a ROSE Data Server, you’re pretty much out of luck since RouterOS doesn’t provide any Btrfs tools for repair or recovery. On top of that, there’s no proper backup and restore solution, which is absolutely essential for quickly getting back up and running if something goes wrong or if an important file accidentally disappears.

For MT to succeed, it must invest significantly more effort into developing a robust software solution to make the RDS2216 a viable storage server for business-critical use. But since this isn’t Mikrotik’s area of expertise, and building a storage server on 'standard' RouterOS is fundamentally flawed, the whole approach seems pointless.

To be bluntly honest, I think MT should either kill the data server product line or spin off the business into a completely separate and independent subsidiary.

The RDS2216 hardware looks great though.

Refs:

viewtopic.php?p=1129645#p1129506

viewtopic.php?p=1129645#p1129574

viewtopic.php?p=1129657#p1129601

viewtopic.php?p=1129657#p1129614

viewtopic.php?p=1129657#p1129633


Re: New exciting features for storage

When comparing the official BTRFS man page with MikroTik’s BTRFS help page, I couldn’t help but notice some more info on official docs:

- https://btrfs.readthedocs.io/en/latest/man-index.html

- https://help.mikrotik.com/docs/spaces/R ... 9711/Btrfs

While I have no real-world experience with BTRFS (other than trying it once out of curiosity), I’ve been working with Linux for over 20 years, and it seems like some essential BTRFS tools might be missing in ROSE. However, I may be mistaken, and perhaps certain functionalities are abstracted away. Even if these tools aren't needed for everyday use, their existence suggests they serve an important purpose.

Considering that the new RDS comes at a price of around $2000 - and with disks, the total cost could reach several thousand dollars - it certainly requires a fair amount of confidence to invest as an early adopter.


Re: New exciting features for storage

This is a cool new toy on the block. If you could beat the reliability of the WAFL filesystem and offer SnapMirror-style functionality that transfers only the delta at the block level, then I could easily convince management to upgrade at least 10 ageing filers that we have running 24x7x365. Their downside: very pricey disks if one fails :)

This product will be disruptive in the market if MT can prove that it is _reliable_ and _comparable_ to other systems out there. Prove us naysayers wrong. The arguments of some folks here are valid and reasonable; don't take them as negative, but instead use them to your advantage to iron out some wrinkles in the product offering. The hardware and price point of the product are reasonable in my opinion. Best of luck, MT.

Re: New exciting features for storage

Well, this new ”cool toy” RDS2216 isn’t even playing the same sport as WAFL, let alone competing in 24x7x365 business-critical operations.

I’d say MikroTik is in way over its head on this one and in a different galaxy than NetApp. 😉


Re: New exciting features for storage

To me, the RDS2216 is like a CCR2216 except:

- twice the RAM

- a more diverse network port mix (fewer SFP28 ports, but 4x SFP+ and 2x copper 10G compensate)

- interesting if early storage features

- $845 cheaper list price(!)

So it's not like you're paying a premium for the storage stuff. If anything, the opposite is true.


Re: New exciting features for storage

Hmm, I’d say the RDS2216 sits somewhere between the CCR2216 and CCR2116 in terms of both specs and price. But yeah, the RDS2216 has a pretty attractive price for that configuration, plus, like you said, you get some storage as well (though personally I wouldn’t use it as a business-critical storage solution at this point)

Model                    Price   Strg-MB  RAM-GB  Ports
========================================================================
CCR2116-12G-4S+          $995    128      16      12xGbE, 4x10GbE SFP+
RDS2216-2XG-4S+4XS-2XQ   $1950   128      32      4x25G SFP28, 2x100G QSFP28, 4xSFP+, 2x10GbE
CCR2216-1G-12XS-2XQ      $2795   128      16      12x25G SFP28, 2x100G QSFP28

Last edited by Larsa on Fri Feb 28, 2025 11:07 am, edited 2 times in total.

Re: New exciting features for storage

They won't call this enterprise storage for no reason. Let them cook this to their heart's desire and let the early adopters decide the fate of this product. Who knows, one of these days, when they are done experimenting, they may figure they would rather go back and focus on routing and switching again.

Re: New exciting features for storage

Yeah, but why waste valuable resources on this experiment? I mean, it’s not even close to offering basic functionality for the SMB market like DSM or QTS do, so IMO the whole concept is dead on arrival and just a total waste of money. Why not just call it what it is: a router with some extra storage capabilities.

Honestly, those resources would’ve been way better spent on developing the new controller or a proper next-gen network management and monitoring tool.


Re: New exciting features for storage

When talking about "next-gen" things in a corporate space, any third-party network/security audit has one sure question in it: "What UTM/NGFW solution is in use and does it have HA?"

I rest my case about "next-gen". While customers are reading newsletters about Wifi 7 solutions from other vendors, it makes me want to rest my case about wireless networking as well. After this it is futile to try to market this "server" to any of these customers...

Re: New exciting features for storage

Larsa, you are confusing this with a home NAS. It's not

-

-

toxicfusion

Member

- Posts: 326

- Joined:

Re: New exciting features for storage

@Normis.

This product IS DOA at this point. The RDS [also, RDS == Remote Desktop Services -- Microsoft] does NOT have the readily available features of existing SMB home NAS units... QNAP and Synology home NAS units already have these features, plus user management and LDAP/AD integration...

When will there be proper disk management

LED blink to identify disks

BTRFS is dead/non-starter -- Unless using Linux mdadm for RAID..

How do we monitor disk health, scrub tasks, snapshot viewing / snapshot tree / restore functions?

User management for SMB shares -- AD/LDAP integration. Or is MikroTik planning to use the UserMan package for this instead?

The Unifi Dream Machines** are more capable than this RDS hardware at this point.

RDS is cool concept, but not ready for prime time.

This is the problem -- MikroTik Hardware == capable and quite good. It is always the hardware released before software is ready. We wait year+ for development to catch up.

Is MikroTik NOT taking the time to research what competition is doing, and how they're doing it?

IE: Explore Synology, QNAP [QT-OS / QTHero], Unifi storage, TrueNAS [SCALE].


Last edited by toxicfusion on Fri Feb 28, 2025 6:29 pm, edited 3 times in total.

-

-

toxicfusion

Member

- Posts: 326

- Joined:

Re: New exciting features for storage

We're still waiting for bug fixes in V7 for ROUTEROS....

Still waiting on proper fixes for CAPsMAN [config sync for HA].

Cambium and Unifi wiping the floor in wireless for access point management, deployment, and performance...... CAPsMAN becoming overly complex and constantly tweaking to get proper wireless performance expected out of commodity wireless chipsets.

Shore up software quality and we will be happier and perhaps less vocal toward MikroTik with our feedback.

Re: New exciting features for storage

Larsa, you are confusing this with a home NAS. It's not

Normis, does indeed not! 😉

Just to be clear, I'm comparing the RouterOS v7 special ROSE edition, running on the ROSE Enterprise Data Server RDS2216 ("designed for enterprise environments. Secure, scalable, and under your control"), with SMB entry-level NAS solutions like DSM or QTS. From that perspective, the special ROSE edition doesn’t even come close. Do you want me to put together a comparison table of the missing key features in RouterOS v7 special ROSE edition?

Have a nice weekend!

P.S.

According to the product page, you can still run virtual machines on the RDS2216 ("Run VMs, automate workloads...") and take advantage of "mission-critical workloads with near-instant failover and future-proof scalability as NVMe technology advances." 😁

-

-

toxicfusion

Member

- Posts: 326

- Joined:

Re: New exciting features for storage


I concur with you..... I also just posted my gripes of missing features.

This product is a great concept... but it is an "eggs in one basket" scenario.

How can this be mission-critical? It only will be with DUAL HA APPLIANCES! [How would that even work, when config sync is MISSING?]

You perform maintenance on the RDS appliance, install a new RouterOS release... BOOM -- the entire network goes down [routing AND storage]. This is the risk with all-in-one. This is OKAY for those with small networks, home, or lab....

It would be better if the RDS appliance ran a stripped-DOWN version of RouterOS alongside the ROSE package.

Leave routing to a dedicated routerOS appliance.

-

-

toxicfusion

Member

- Posts: 326

- Joined:

Re: New exciting features for storage

If MikroTik would like to evaluate and R&D how to implement ZFS in RouterOS -- talk to Jorgen Lundman of OpenZFS. He can probably help you develop ZFS for RouterOS -- MikroTik's own Linux OS. Given that ZFS cannot be a kernel module on a Linux distro, only part of BSD*

Re: New exciting features for storage

.

... just ingested the february news-cake ! ! ! ! !

.

... this is ... is ... a bold offer !

.

lot of people will ... both ways ...

.

and as an old MOTD-wizzard wisely said:

.

great power, comes with great responsibility


Re: New exciting features for storage

... don't get me wrong *) ... I'm flashed ...

.

*) ... cause you always get me wrong


Re: New exciting features for storage

Even vanilla Btrfs RAID 1 can be a real headache, for example, if a disk intermittently disconnects or fails for some reason and then gets marked as unreliable. Restoring a Btrfs RAID 1 is a pretty complicated process and requires expert knowledge, as @Petch1 pointed out in another thread. In other words, Btrfs RAID 1 is nothing like a normal RAID 1 with automatic resync. There are plenty of examples of this online.

They have updated the docs and changed the file system in the RAID examples to ext4

https://help.mikrotik.com/docs/pages/di ... ersions=91

-

-

toxicfusion

Member

- Posts: 326

- Joined:

Re: New exciting features for storage

lmfao, this cant be real -- they changed from BTRFS to ext4 , in real-time... what about the marketing for snapshots?............

Yes, ext4 mdadm is much preferred and stable over BTRFS. I'd actually like to see XFS -- but you lose snapshot ability.

Synology and QNAP have their own implementation of RAID5/6 of BTRFS -- which I doubt MikroTik has yet to research or solidify...

ZFS would be ideal here, but would take significant development to work with their own linux distro and the kernel version they're using.

In my eyes, this is a very early product, a beta. Hardware was released WAY too early; software QA needs to catch up.

We still need a way to comfortably manage the disks, scrub tasks, SMART tests, blink LEDs, etc. A lot is lacking at this point. No one will want to use the MikroTik CLI to configure their "enterprise storage" when it can be done BETTER and with more peace of mind with other vendors. Until MikroTik adds ease of disk management and all the needed elements into Winbox or WebFig... this is DOA.

RDS should be running its own MikroTik storageOS, with its own management interface.


Re: New exciting features for storage

I have zero interest in NASes, or in MikroTik making a NAS, let alone a NAS OS. I did, however, immediately order two RDS2216s (to start), specifically because they are routers that run RouterOS.

I think if you currently use high end MikroTik gear like the CCR2216 in your organization you are already well off the beaten path in terms of making choices nobody ever fired an IT manager for. So for folks like you, incrementally adding some storage features to your network infrastructure might enable some interesting use cases, and if not, at least you got a weird green CCR on the cheap.

MikroTik is eating the elephant one bite at a time. Hopefully in a couple of years you can do more, with a more polished experience.

But people who say that MikroTik should have chosen to spend years more, developing storage software in the dark, not shipping any hardware, know nothing about how products in general or software in particular is made.

It's equally tiring to hear capital-letter IT Professionals who would only ever use MikroTik at home chime in anyway. Please don't enumerate the ways the RDS2216 is worse than your seven-figure storage install in terms of your specific ISO 27001 compliance regimen. Literally nobody cares. You can laugh it up with your NetApp or Nutanix rep the next time you're wined and dined pre-renewal.

Re: 📣 WinBox 4 is here 📣

Now with the nice new ROSE server surprise, I decided to test the rosestorage package.

Like, when MikroTik dares to store files on RouterOS, then I can do it too, right?

Well, it works great!!!

So I'm running a 4-core 3 GHz PC with 16 GB RAM, 4x 4 TB hard disks in RAID 5 + 2x 500 GB SSDs in RAID 1.

I've put a few million files on it, an exact duplicate of my Windows file server.

This works very well, I'm surprised. And it's pretty easy to set up too!

Except that there is no real way to see which files are on the disks.

The current 'Files' option in WinBox tries to download the complete list of files first, then creates a tree view of it.

But with millions of files, this takes ages and I've never actually seen it finish.

In the CLI, something similar happens, you just get a dump of all the files.

I suppose you (MikroTik) experience this as well.

The obvious solution is to change this behavior so that it gets the list of files/dirs in the current directory first; then, when you click a plus sign, it gets the list for that folder, and so on. Like in a real file explorer on macOS or Windows.
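To make the idea concrete, here is a minimal sketch of that per-directory loading in Python. It is only an illustration of the technique, not how WinBox or RouterOS handles files; the names `list_children` and `LazyTree` are made up for this example.

```python
import os


def list_children(path):
    """Return sorted (name, is_dir) pairs for the direct children of path only."""
    with os.scandir(path) as entries:
        return sorted((e.name, e.is_dir(follow_symlinks=False)) for e in entries)


class LazyTree:
    """Hypothetical tree model: a directory is read only when it is expanded."""

    def __init__(self, root):
        self.root = root
        self.loaded = {}  # relative directory path -> list of (name, is_dir)

    def expand(self, rel=""):
        # Read a directory the first time the user "clicks the plus sign";
        # later expansions of the same directory reuse the cached listing.
        if rel not in self.loaded:
            self.loaded[rel] = list_children(os.path.join(self.root, rel))
        return self.loaded[rel]
```

With this shape, opening the root costs one directory read no matter how many millions of files sit in the subfolders; each subfolder is only read when someone actually opens it.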

I hope this will become a reality in the future.

In the meantime I can just use an SMB client to view the files.

Speaking of SMB, I noticed that when you create a share with uppercase characters in its name, a second share with the same name in lowercase only is always visible too. I don't know if that is a Linux thing, but for now I just changed all share names to lowercase.

BTW Good job on the rosestorage package and the cool green box, awesome.

teslasystems

Re: 📣 WinBox 4 is here 📣

I've mentioned it multiple times and no one cares. It will never work normally without fully changing the approach to working with files.

The current 'Files' option in winbox tries to download the complete list of files first, then creates a treeview of it.

But with millions of files, this takes ages and I've never actually seen it finish.

In the CLI, something similar happens, you just get a dump of all the files.

I suppose you (MikroTik) experience this as well.

The obvious solution is to change this behavior into getting a list of files/dirs in the current directory first, then when you click a plus sign, it will get the list of that folder, etc. Like in a real file-explorer on macos or windows.

I hope this will become a reality in the future.

Last edited by teslasystems on Tue Mar 04, 2025 12:10 am, edited 1 time in total.

Re: 📣 WinBox 4 is here 📣

Maybe now is the time for them to start taking this issue seriously?

This is offtopic, but I've mentioned it multiple times in other topics and no one cares. It will never work normally without fully changing the approach to working with files.

It makes more sense now, right?

teslasystems

Re: 📣 WinBox 4 is here 📣

+

Maybe now is the time for them to start taking this issue seriously?

This is offtopic, but I've mentioned it multiple times in other topics and no one cares. It will never work normally without fully changing the approach to working with files.

It makes more sense now, right?

Re: 📣 WinBox 4 is here 📣

n/a

Last edited by Larsa on Mon Mar 03, 2025 7:27 pm, edited 1 time in total.

Re: New exciting features for storage

Post about ROSE by sszbv moved to the right topic.

Re: New exciting features for storage

Sorry for posting it in the wrong topic, I searched for a ROSE topic, but couldn't easily find it.

Post about ROSE by sszbv moved to the right topic.

Second best was Winbox 4 in my opinion.

I see here that others also mentioned the file tree being, well, not very useful with many files.

On the CLI it is sort of workable with the new path option.

Like:

/file print path=<tab>

sort of gives you the possibility to browse through the files

Re: New exciting features for storage

+1 for your idea!

I, for one, welcome our Storage overlords.

When ROSE came out, I put an NVMe to SATA adapter in a 2116, punched a hole, ran some thin SATA cables out, and hooked up six SATA drives in a bay the size of a CDROM. It works pretty well.

The advantage MikroTik has over a PC is wirespeed switching/routing (on Marvell platforms). I run a bunch of containers on my 2116. Going to have to add the Minecraft one (didn't know it existed for ARM64).

No mercy on the case, just put a hole in it!

Anyway, I think I'll try that too, just to see what happens.

I already tried the CCR2004 PCIe card on the CCR2116 with an M.2 to PCIe converter :)

That works fine

Re: New exciting features for storage

Not a problem. I should have written "Moved to the dedicated ROSE topic.".

Sorry for posting it in the wrong topic, I searched for a rose topic, but couldn't easily find it.

Post about ROSE by sszbv moved to the right topic.

Second best was Winbox 4 in my opinion.....

Re: New exciting features for storage

At the risk of being off topic, could you describe that further? Works fine in terms of just getting power, or does it also appear as network interfaces on the CCR2116? Any details on the physical setup?

I already tried the CCR2004 PCIe card on the CCR2116 with an M.2 to PCIe converter :)

That works fine

Re: New exciting features for storage

It shows up in the interfaces. Just use an M.2/NVMe to PCIe x16 slot converter from AliExpress + a power supply.

At the risk of being off topic, could you describe that further? Works fine in terms of just getting power, or does it also appear as network interfaces on the CCR2116? Any details on the physical setup?

I already tried the CCR2004 PCIe card on the CCR2116 with an M.2 to PCIe converter :)

That works fine

Re: New exciting features for storage

I used normal RAID first and then used BTRFS as a filesystem, because I thought it would be safe enough. But now that the example has changed...

Even vanilla Btrfs RAID 1 can be a real headache, for example, if a disk intermittently disconnects or fails for some reason and then gets marked as unreliable. Restoring a Btrfs RAID 1 is a pretty complicated process and requires expert knowledge, as @Petch1 pointed out in another thread. In other words, Btrfs RAID 1 is nothing like a normal RAID 1 with automatic resync. There are plenty of examples of this online.

They have updated the docs and changed the file system in the RAID examples to ext4

https://help.mikrotik.com/docs/pages/di ... ersions=91

Should I be worried and redo the whole system with ext4?

Re: New exciting features for storage

I wonder if MikroTik has really thought about worst-case scenarios when developing RDS and ROSE. It’s easy to assume everything will work perfectly, but reality is often more complicated. That’s why setting up a NAS on a standard Ubuntu system isn’t simple - there’s a reason why special distributions exist for this.

Also, a full Linux system offers many recovery options. I would be concerned. As an RDS admin, if I run into an unexpected Btrfs issue that MikroTik didn’t plan for, I can’t spend weeks sending *supout.rif* files back and forth with support. My data needs to stay available and online.

Re: New exciting features for storage

I'm starting to think that the SWAP partition is not only used by containers

https://help.mikrotik.com/docs/spaces/R ... -Swapspace

What's new in 7.18 (2025-Feb-24 10:47):

*) disk - allow to add swap space without container package;

Sorry, it's not clear to me if I can use the SWAP partition only for containers or also with ROS.

I'm asking because I have several hAP ac2 that can't use SMB (router freezes and restarts itself when transfers are large) and containers due to low RAM.

Before changing disk partitions I would like to make sure it is useful...

Thanks

EDIT: I found the answer, Normis wrote:

Swap is not for the host system. Please read the manual about it before complaining: https://help.mikrotik.com/docs/spaces/R ... -Swapspace

I am not using containers and I don't even have the container package installed

Re: New exciting features for storage

Even Druvis mentions the SWAP can be used not only for containers: https://youtu.be/wJw50I7STck?t=131

Re: New exciting features for storage

On my RBAHx4 Dude Edition I tried setting up a swap file but it doesn't seem to work:

/disk add type=file file-path=sata-part1/swapfile file-size=500M swap=yes

Re: New exciting features for storage