Please skip this message if you're not in the mood for a long (TL;DR) manual.

We start from a scenario where nothing special needs to be done: the mAP has one bridge with two interfaces and a DHCP server, and that's it. This is just to make sure there are no problems on the producer and consumer devices themselves.

Schema

The config:

/interface bridge
add arp=enabled fast-forward=no name=Cons-Br protocol-mode=none
/ip pool
add name=dhcp_pool0 ranges=192.168.0.10-192.168.0.20
/ip dhcp-server
add address-pool=dhcp_pool0 disabled=no interface=Cons-Br lease-time=2m name=dhcp1
/interface bridge port
add bridge=Cons-Br hw=no interface=ether1
add bridge=Cons-Br hw=no interface=ether2
/ip address
add address=192.168.0.1/24 interface=Cons-Br network=192.168.0.0
/ip dhcp-server network
add address=192.168.0.0/24 dns-none=yes gateway=192.168.0.1 netmask=24

As the next step, we move the Ethernet ports into separate bridges.

This is where the problems start. Devices can ping each other, because the other subnet is reachable via the default gateway, and I can open the NAS management page from the computer. But the laptop can't discover the NAS as a DLNA server.

/interface bridge
add fast-forward=no name=Cons-Br protocol-mode=none
add fast-forward=no name=Prod-Br protocol-mode=none
/ip pool
add name=dhcp_pool0 ranges=192.168.0.10-192.168.0.20
add name=dhcp_pool2 ranges=192.168.1.10-192.168.1.20
/ip dhcp-server
add address-pool=dhcp_pool0 disabled=no interface=Cons-Br lease-time=2m name=dhcp1
add address-pool=dhcp_pool2 disabled=no interface=Prod-Br lease-time=2m name=dhcp2
/interface bridge port
add bridge=Cons-Br hw=no interface=ether1
add bridge=Prod-Br hw=no interface=ether2
/ip address
add address=192.168.0.1/24 interface=Cons-Br network=192.168.0.0
add address=192.168.1.1/24 interface=Prod-Br network=192.168.1.0
/ip dhcp-server network
add address=192.168.0.0/24 dns-none=yes gateway=192.168.0.1 netmask=24
add address=192.168.1.0/24 dns-none=yes gateway=192.168.1.1 netmask=24

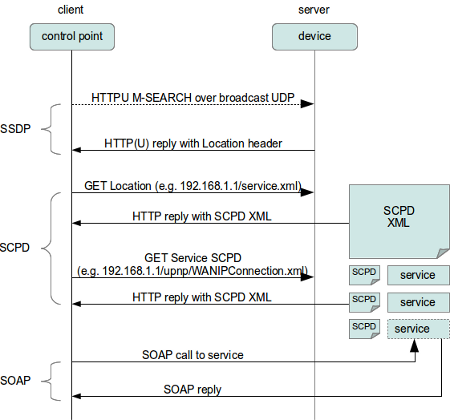

If I understand correctly, SSDP is responsible for the discovery. Packets are always sent to UDP port 1900, but the source port may vary. Many posts say that SSDP packets are sent with TTL=1, so they are not forwarded anywhere. OK, I think we can fix that with a mangle rule:

/ip firewall mangle
add action=change-ttl chain=prerouting dst-address-type=multicast \
    log-prefix=TTL+ new-ttl=set:64 passthrough=yes port=1900 protocol=udp

Note that according to the specification (UPnP Device Architecture 1.0), the TTL of these packets should default to 4, although some devices ignore that. I'm not sure whether a higher TTL may cause issues; at least it doesn't in my setup.
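To test discovery from the laptop side without a DLNA client, here is a minimal Python sketch (assuming Python 3.9+) that sends one SSDP M-SEARCH to 239.255.255.250:1900 with an explicit IP TTL and collects whatever replies come back. The `ssdp:all` search target, `MX: 2` and the 3-second timeout are illustrative choices, not values from the setup above; `ttl=4` follows the specification value just mentioned. Replies to an M-SEARCH arrive as unicast UDP on the sender's source port.

```python
import socket

# SSDP multicast group and port used by UPnP/DLNA discovery.
SSDP_GROUP = "239.255.255.250"
SSDP_PORT = 1900


def build_msearch(search_target: str = "ssdp:all", mx: int = 2) -> bytes:
    """Build an SSDP M-SEARCH request; headers end with an empty line (CRLF CRLF)."""
    lines = [
        "M-SEARCH * HTTP/1.1",
        f"HOST: {SSDP_GROUP}:{SSDP_PORT}",
        'MAN: "ssdp:discover"',
        f"MX: {mx}",  # max seconds a device may wait before replying
        f"ST: {search_target}",  # what we are searching for (assumed: everything)
        "",
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


def send_msearch(ttl: int = 4, timeout: float = 3.0) -> list[tuple[str, bytes]]:
    """Send one M-SEARCH with the given IP TTL and collect unicast replies."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    try:
        # IP TTL of outgoing multicast packets (the OS default is usually 1).
        sock.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_TTL, ttl)
        sock.settimeout(timeout)
        sock.sendto(build_msearch(), (SSDP_GROUP, SSDP_PORT))
        replies = []
        while True:
            try:
                data, (addr, _port) = sock.recvfrom(65507)
            except socket.timeout:
                break  # no more replies within the timeout window
            replies.append((addr, data))
        return replies
    finally:
        sock.close()


if __name__ == "__main__":
    for addr, data in send_msearch(ttl=4):
        # Print the sender and the first line of each reply.
        print(addr, data.split(b"\r\n", 1)[0])
```

If the NAS (192.168.1.x) never appears in the output while devices in the laptop's own subnet do, the replies are not making it across the bridges.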

Then we need to configure PIM so that multicast packets are routed between the bridges. Here comes the tough part. The PIM example says it's enough to add the bridges as PIM interfaces and configure a static Rendezvous Point (RP). We do not need to configure any alternative-subnets, because the devices can reach each other directly. From the Wiki:

You may also need to configure alternative-subnets on upstream interface - in case if the multicast sender address is in an IP subnet that is not directly reachable from the local router

The RP is recommended to be placed close to the producer, because the traffic flows from the media server down to all devices subscribed to a given group. In my case I use Prod-Br (192.168.1.1) as the RP. I'm not sure whether a BSR has to be defined; I haven't yet understood its purpose.

/routing pim bsr-candidates
add interface=Cons-Br
/routing pim interface
add interface=Cons-Br
add interface=Prod-Br
/routing pim rp
add address=192.168.1.1
/routing pim rp-candidates
add interface=Prod-Br

Another thing mentioned is adding a static route on the producer. DLNA uses the 239.255.255.250 group; alternatively, we can cover the whole multicast range 224.0.0.0/4, so I added a route to this network via the IP address of the bridge the NAS is connected to. The command is executed on the NAS:

route add -net 224.0.0.0 netmask 240.0.0.0 gw 192.168.1.1 dev eth0

The new route is added. Be aware that this route only exists until the next reboot. Check this page to see how to make it permanent using an autorun script.

route -n
Kernel IP routing table
Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
0.0.0.0         192.168.1.1     0.0.0.0         UG    0      0        0 eth0
127.0.0.0       0.0.0.0         255.0.0.0       U     0      0        0 lo
169.254.0.0     0.0.0.0         255.255.0.0     U     0      0        0 qvs0
192.168.1.0     0.0.0.0         255.255.255.0   U     0      0        0 eth0
224.0.0.0       192.168.1.1     240.0.0.0       UG    0      0        0 eth0
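The netmask form used in `route add` (224.0.0.0 netmask 240.0.0.0) and the CIDR form 224.0.0.0/4 describe the same network. A short check with Python's standard `ipaddress` module confirms this and shows that the groups seen in the PIM output below fall inside it; `covered_by_route` is a hypothetical helper written for this illustration.

```python
import ipaddress

# The route added on the NAS: "route add -net 224.0.0.0 netmask 240.0.0.0"
nas_route = ipaddress.ip_network("224.0.0.0/240.0.0.0")

# DLNA/SSDP group plus the other group that shows up in "mfc print"
groups = ["239.255.255.250", "239.192.152.143"]


def covered_by_route(group: str, network: ipaddress.IPv4Network = nas_route) -> bool:
    """Return True if the address is multicast and lies inside the routed network."""
    addr = ipaddress.ip_address(group)
    return addr.is_multicast and addr in network


if __name__ == "__main__":
    print(nas_route.with_prefixlen)      # 224.0.0.0/4
    print(nas_route.broadcast_address)   # 239.255.255.255
    for g in groups:
        print(g, covered_by_route(g))
```

Using /4 rather than a host route to 239.255.255.250 means any other group the NAS sends to (such as 239.192.152.143) also leaves via the router.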

Now I need to make sure that both the NAS and the laptop are sending SSDP packets to the router. Add the following rules: the first one shows SSDP packets arriving at a bridge interface, the second one shows packets forwarded between the bridges.

/ip firewall filter
add action=passthrough chain=input dst-address-type=multicast dst-port=1900 \
    log=yes log-prefix=in-SSDP protocol=udp
add action=passthrough chain=forward dst-address-type=multicast log=yes \
    log-prefix=fw-SSDP port=1900 protocol=udp

So far so good: the counters are increasing, and the log shows packets arriving and being forwarded. I can see SSDP from the laptop being sent to both bridges. The packets from the laptop are smaller; the ones from the NAS are bigger.

The PIM results are below and look valid as far as I understand.

/routing pim> bsr print
 zone-type=active bsr-address=192.168.0.1 scope-zone=224.0.0.0/4 bsr-priority=1
   local-address=192.168.0.1 local-priority=1 state=elected timeout=31s

 zone-type=configured bsr-address=192.168.0.1 scope-zone=224.0.0.0/4
   bsr-priority=1 local-address=192.168.0.1 local-priority=1 state=init

/routing pim> mrib print
Flags: X - disabled, I - inactive, D - dynamic
 #   DESTINATION      GATEWAY   METRIC INTERFACE
 0 D 192.168.0.0/24   0.0.0.0        0 Cons-Br
 1 D 192.168.1.0/24   0.0.0.0        0 Prod-Br

/routing pim> mfc print
GROUP            SOURCE         RP
239.192.152.143  192.168.1.18   192.168.1.1
239.255.255.250  192.168.0.18   192.168.1.1
239.255.255.250  192.168.1.18   192.168.1.1

/routing pim> join print
Flags: RP - (*,*,RP), WC - (*,G), SG - (S,G), SG_rpt - (S,G,rpt)
        GROUP            SOURCE         RP
WC      224.0.0.0        192.168.1.1    192.168.1.1
SG      239.192.152.143  0.0.0.0        192.168.1.1
SG      239.255.255.250  0.0.0.0        192.168.1.1
SG_rpt  239.192.152.143  192.168.1.18   192.168.1.1
SG_rpt  239.255.255.250  192.168.0.18   192.168.1.1
SG_rpt  239.255.255.250  192.168.1.18   192.168.1.1

/routing pim> igmp-group print
Flags: v1 - IGMPv1, v2 - IGMPv2, v3 - IGMPv3,
       I - include, E - exclude, F - forward, D - don't forward
     INTERFACE  GROUP            SOURCE   TIMEOUT
v2E  Cons-Br    224.0.0.2        0.0.0.0  3m44s
v2E  Cons-Br    224.0.0.13       0.0.0.0  3m49s
v2E  Cons-Br    224.0.0.22       0.0.0.0  3m45s
v2E  Cons-Br    239.255.255.250  0.0.0.0  3m46s
v2E  Prod-Br    224.0.0.2        0.0.0.0  3m55s
v2E  Prod-Br    224.0.0.13       0.0.0.0  3m47s
v2E  Prod-Br    224.0.0.22       0.0.0.0  3m48s
v2E  Prod-Br    239.192.152.143  0.0.0.0  3m51s
v2E  Prod-Br    239.255.255.250  0.0.0.0  3m48s

But the whole setup still doesn't seem to work. The Device Sniffer tool from Developer Tools for UPnP Technology lets me check which messages my laptop sees. The only relevant ones are NOTIFY messages with ssdp:alive from the NAS (and those are very rare). So, according to the UPnP documentation, something does work. The catch is that these packets carry an NT header, meaning it is the NAS announcing its presence on its own. A response to the laptop's M-SEARCH should instead arrive as a unicast HTTP/1.1 200 OK message carrying an ST header, and I don't see any of those.
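The NOTIFY versus search-reply distinction can be made concrete with a small Python sketch that classifies a raw SSDP datagram by its start line and headers: NOTIFY announcements carry NT/NTS, while M-SEARCH queries and their HTTP/1.1 200 OK replies carry ST. The function names and the sample announcement are made up for illustration, not captured from this network.

```python
def parse_ssdp(datagram: bytes) -> tuple[str, dict[str, str]]:
    """Split an SSDP datagram into its start line and headers (names upper-cased)."""
    text = datagram.decode("utf-8", errors="replace")
    head = text.split("\r\n\r\n", 1)[0]  # only the header section matters here
    start_line, *header_lines = head.split("\r\n")
    headers: dict[str, str] = {}
    for line in header_lines:
        name, sep, value = line.partition(":")  # split on the first colon only
        if sep:  # skip malformed lines without a colon
            headers[name.strip().upper()] = value.strip()
    return start_line.strip(), headers


def classify_ssdp(datagram: bytes) -> str:
    """Label a datagram as an announcement, a search, or a search reply."""
    start_line, headers = parse_ssdp(datagram)
    if start_line.startswith("NOTIFY"):
        # Unsolicited announcement: NT = what is announced, NTS = ssdp:alive / ssdp:byebye
        return f"notify {headers.get('NTS', '?')} NT={headers.get('NT', '?')}"
    if start_line.startswith("M-SEARCH"):
        # Multicast query, e.g. sent by the laptop
        return f"search ST={headers.get('ST', '?')}"
    if start_line.startswith("HTTP/1.1 200"):
        # Unicast reply to an M-SEARCH: carries ST, not NT
        return f"response ST={headers.get('ST', '?')}"
    return "unknown"


if __name__ == "__main__":
    # Made-up sample announcement, not captured from this network.
    sample = (
        b"NOTIFY * HTTP/1.1\r\n"
        b"HOST: 239.255.255.250:1900\r\n"
        b"NT: urn:schemas-upnp-org:device:MediaServer:1\r\n"
        b"NTS: ssdp:alive\r\n"
        b"\r\n"
    )
    print(classify_ssdp(sample))
```

Applied to what the laptop receives, the symptom described above would show up as `notify ssdp:alive ...` lines from the NAS and no `response ...` lines at all.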

This led me to the conclusion that the other bridge doesn't receive some IGMP packets. First things first: just like with the SSDP TTL, add a mangle rule that increases the TTL of IGMP traffic.

/ip firewall mangle
add action=change-ttl chain=prerouting new-ttl=set:64 passthrough=yes protocol=igmp
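Why a TTL rewrite in prerouting matters for both rules (SSDP and IGMP) can be shown with a toy model of a single routed hop, for example Cons-Br to Prod-Br. This is a simplification written for illustration, assuming the router does not forward a packet whose TTL would drop to 0 and that mangle in `chain=prerouting` runs before the routing decision; it ignores PIM state entirely. IGMP messages are sent with TTL=1 by design, which is why they never cross the hop without the rule.

```python
from typing import Optional


def ttl_after_hop(ttl_in: int, mangle_set: Optional[int] = None) -> Optional[int]:
    """Toy model of one routed IPv4 hop.

    mangle_set mimics `action=change-ttl new-ttl=set:N` in chain=prerouting,
    which rewrites the TTL before the routing decision is made.
    Returns the TTL on the egress interface, or None if the packet is dropped.
    """
    # Prerouting mangle runs first, so the rewritten TTL is what routing sees.
    ttl = mangle_set if mangle_set is not None else ttl_in
    if ttl <= 1:
        # Forwarding would decrement the TTL to 0: the packet stays on its segment.
        return None
    # A forwarded packet leaves with its TTL decremented by one.
    return ttl - 1


if __name__ == "__main__":
    for label, ttl_in, mangle in [
        ("sent with TTL=1, no rule", 1, None),
        ("sent with TTL=4, no rule", 4, None),
        ("sent with TTL=1, set:64 rule", 1, 64),
    ]:
        print(label, "->", ttl_after_hop(ttl_in, mangle))
```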

And right after that everything starts to work properly.