some quick comments on configuring cake

Hi, one of the contributors to cake here. I'm pleased y'all are finally shipping it, but I have a few comments:

* A modern version of cake has support for the new diffserv LE codepoint. I'd dearly like support for that in mikrotik given how problematic CS1 proved to be, and it's a teeny patch.

* One feature of cake is that it runs the same whether at line rate or with the shaper enabled, so you can get per-host/per-flow fq, diffserv classification, etc. I'm very interested in learning of results when you try to run it or fq_codel at line rate, rather than shaped. fq_codel is the default on all interfaces, rather than pfifo_fast, in most linuxes today. I would really like it if people put it through a battery of flent rrul tests or heavy iperf, and took captures, and plotted rtts, particularly on the higher end mikrotik hw. It is most useful with working BQL in the device driver.

* https://help.mikrotik.com/docs/display/ROS/Queues is missing support for the gso-splitting option. When using the shaper component below 1gbit, gro "super"packets are automatically split back up into packets (and then interleaved with other flows); when unshaped, or above 1gbit, they are not. If you've got the cpu, split up superpackets.

* If you are natting at the router, try the nat option. This does not work with some forms of offloaded nat.

* If you have major bandwidth asymmetry on a link (greater than 10:1), try the ack-filter option on the slower part of the link. It gets to be a hugely *necessary* idea at ratios higher than that, see: https://blog.cerowrt.org/post/ack_filtering/

* It would be nice if mikrotik had some way of polling and displaying statistics from fq_codel for backlog, reschedules, drops, and marks, and from cake for the same. Exposing these statistics to more users would drive understanding of the role of packet loss (and marking) in controlling network delay. tc supports json output, multiple tools can parse that. See the enthusiasm for collecting stats over in the starlink community... I would love to see at the very least, drop stats out of the mikrotik userbase.

* When shaping dsl especially, it's very important to get the link type "framing" right, but also useful on cablemodems to set the docsis parameter. You can get hard up against the actual configured cablemodem rate in particular in this way instead of wasting 5-15%, and in the dsl case it is *impossible* to get a consistent shaped rate unless you set it right, or at least, conservatively. I mean that. Impossible to get some forms of dsl right unless you compensate.

* If you aren't going to use diffserv, use cake besteffort, to save on memory and cpu. To save on cpu further, don't use the ack-filter or nat options.

* There are a bunch of per host/per flow fq options that are dependent on your use cases for regulating traffic between ip addresses or ports.

* Use wash on ingress when you don't trust the diffserv markings from upstream. This is a pretty heavy hammer, and it is preferred that y'all communicate with your customers about how you treat diffserv and let them optimize their own traffic, only remarking from 0 (best effort) to something else if you need to. There is a published guide to zoom traffic, among others. Wash on egress if you aren't following the relevant RFCs.

* Cake tries really hard to follow a bunch of mutually conflicting diffserv RFCs, and in an age where videoconferencing is very important the cake diffserv4 model is closer to how a wifi AP treats it. See: https://www.w3.org/TR/webrtc-priority/ for this underused facility in webrtc.

* Despite saying all this about diffserv, it generally ranks dead last as an optimization technique versus better statistical multiplexing from FQ, and the short queues you get from an AQM.
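On the statistics point above: a minimal sketch of pulling backlog, requeues, drops and marks out of tc's JSON output on a plain Linux box (not RouterOS). The sample is made up for illustration, and the `ecn_mark` field name is an assumption about what fq_codel reports, so check it against your own `tc -s -j qdisc show` output.

```python
import json

# Made-up sample shaped like `tc -s -j qdisc show` output; numbers are illustrative.
SAMPLE = """
[
  {"kind": "fq_codel", "handle": "0:", "dev": "eth0", "root": true,
   "bytes": 1500000, "packets": 1000, "drops": 12, "overlimits": 0,
   "requeues": 3, "backlog": 4542, "qlen": 3, "ecn_mark": 5},
  {"kind": "cake", "handle": "8001:", "dev": "eth1", "root": true,
   "bytes": 900000, "packets": 600, "drops": 0, "overlimits": 40,
   "requeues": 0, "backlog": 0, "qlen": 0}
]
"""


def summarize(tc_json):
    """Return one small dict per qdisc with the counters discussed above."""
    rows = []
    for q in json.loads(tc_json):
        sent = q.get("packets", 0)
        drops = q.get("drops", 0)
        offered = sent + drops
        rows.append({
            "dev": q.get("dev"),
            "kind": q.get("kind"),
            "backlog_bytes": q.get("backlog", 0),
            "requeues": q.get("requeues", 0),
            "drops": drops,
            # Assumed field name; treated as 0 when a qdisc does not report it.
            "marks": q.get("ecn_mark", 0),
            # Drops as a percentage of everything offered (sent + dropped).
            "drop_pct": round(100.0 * drops / offered, 2) if offered else 0.0,
        })
    return rows


for row in summarize(SAMPLE):
    print(row)
```

Feeding it real output would be something like piping `tc -s -j qdisc show dev eth0` into `summarize()`; even just graphing `drops` over time would be a start.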

I should stress that these are options and are optional, aside from getting shaped dsl compensation right, the cake defaults are pretty good.
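To make the options above a bit more concrete, here is a small sketch that assembles a Linux `tc` command line from them. The keywords (`bandwidth`, `besteffort`, `diffserv4`, `nat`, `ack-filter`, `wash`, `split-gso`) are the ones documented in tc-cake(8); the helper names and the interface names are hypothetical, and RouterOS exposes these through its own queue settings rather than through tc.

```python
def cake_args(bandwidth=None, diffserv=None, nat=False,
              ack_filter=False, wash=False, split_gso=None):
    """Build the cake part of a tc command as a list of words."""
    args = ["cake"]
    if bandwidth:                 # omit to run unshaped, at line rate
        args += ["bandwidth", bandwidth]
    if diffserv:                  # e.g. "besteffort" or "diffserv4"
        args.append(diffserv)
    if nat:                       # look through NAT done on this router
        args.append("nat")
    if ack_filter:                # for the slow side of an asymmetric link
        args.append("ack-filter")
    if wash:                      # clear diffserv markings
        args.append("wash")
    if split_gso is not None:     # explicit superpacket splitting choice
        args.append("split-gso" if split_gso else "no-split-gso")
    return args


def tc_command(dev, **opts):
    """Full command for replacing the root qdisc on a (hypothetical) interface."""
    return ["tc", "qdisc", "replace", "dev", dev, "root"] + cake_args(**opts)


# Slow uplink of an asymmetric link, no diffserv, NAT on the router:
print(" ".join(tc_command("eth0", bandwidth="800kbit",
                          diffserv="besteffort", nat=True, ack_filter=True)))

# Line rate with the defaults:
print(" ".join(tc_command("eth1")))
```

The second call shows the "defaults are pretty good" case: with no options at all you still get the fq and aqm behaviour, just without the shaper.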

Other notes:

* Telling your customers how they can have better wifi at home is useful also! In most cases the bufferbloat starts to shift to the home wifi at above 40mbit, and no matter all the contortions you've done here to manage your bandwidth to/from them better, everybody benefits from better home routers with sqm on the link and fq_codel on the wifi: https://blog.linuxplumbersconf.org/2016 ... y-3Nov.pdf

* The cake mailing list is the best place to ask questions or make feature requests: https://lists.bufferbloat.net/listinfo/cake - see also the archives there or on the related "Bloat" mailing list. Cake is the most advanced smart queue management (SQM) system we've been able to design as yet: https://www.bufferbloat.net/projects/ce ... anagement/ and whilst we initially targeted it at cpe and home gateways, it is certainly proving useful in the middle of an ISP's network. We are very interested in feedback as to how to make it, or something like it, better for ISPs. One example (that I have NO idea how to make work on mikrotik) is here: https://github.com/rchac/LibreQoS

* There are multiple academic papers on how fq_codel and cake actually work, the best summary of most of the things we did to beat bufferbloat in linux is in the online book; https://bufferbloat-and-beyond.net/ - but feel free to hit google scholar for "bufferbloat", and the cobalt AQM.

* I'm really big on explaining the why (in addition to the how, above), at various levels, including entertaining ones like this:

https://blog.apnic.net/2020/01/22/buffe ... -over-yet/

Last edited by dtaht on Sun Oct 10, 2021 8:57 pm, edited 8 times in total.

Re: some quick comments on configuring cake

Dave, thanks for very useful tips! Should be included as "best practice" in the ros documentation.

Re: some quick comments on configuring cake

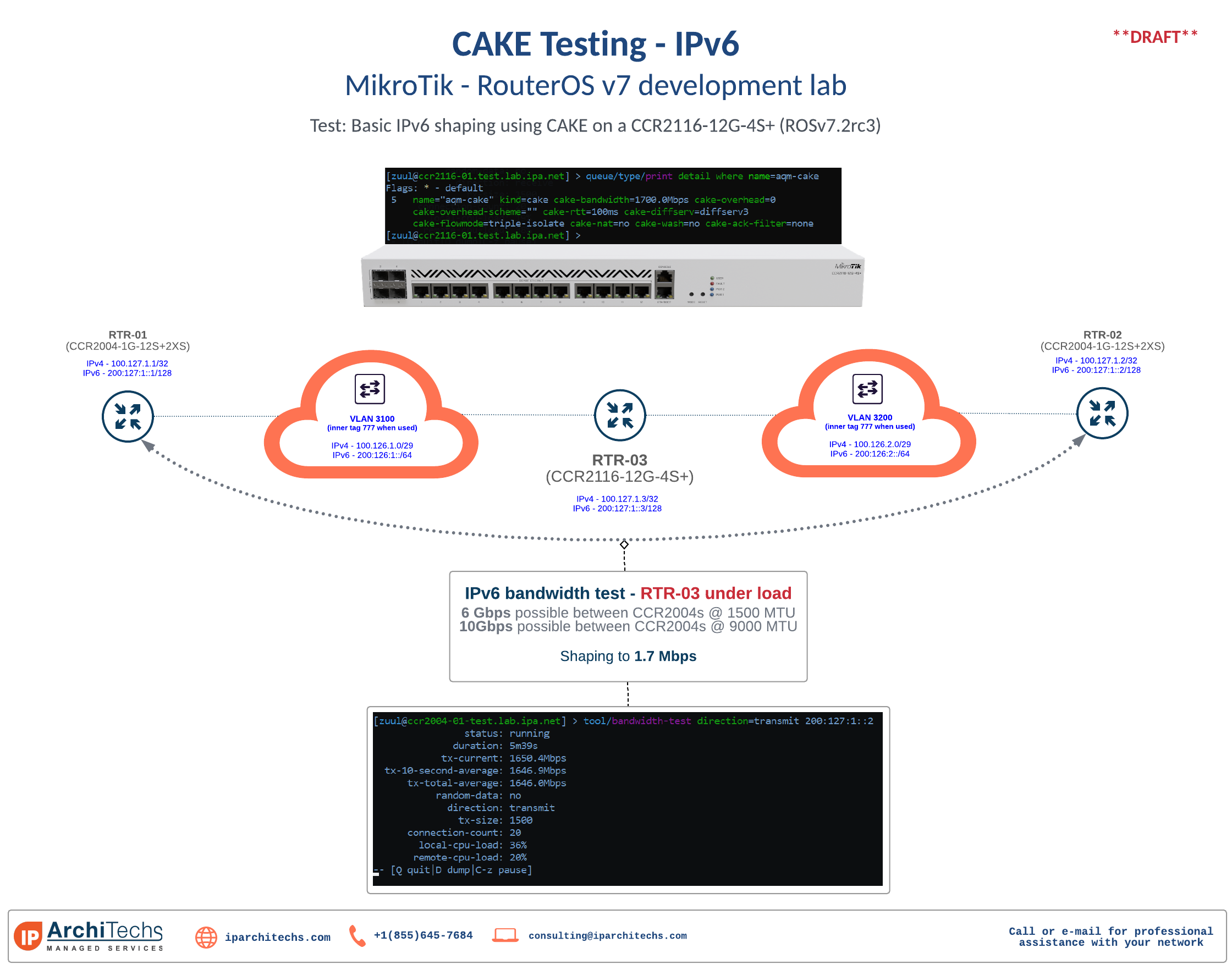

To give an example of where I'd hoped to see fq_codel or cake make more of a dent in the mikrotik universe, consider a topology like this:

10Gbit -> 1GBit port A

-> 1Gbit port B

10 more ports

In ANY fast->slow rate transition, fair queuing and aqm can soften the impact of that 10Gbit interface (or multiple 1Gbit interfaces) fed into 1Gbit Port A here, achieving near zero latency for sparse flows and ultimately 5ms or less for incoming traffic. It's a complete unknown how deep the buffers are on those 1Gbit ports throughout the world (or in any stepdown), but I strongly suspect they are far deeper than 5ms, and few have anything other than a FIFO on them. This recent paper was good: https://arxiv.org/pdf/2109.11693.pdf

Some offload engines for switches have gained RED of late, but that's still finicky to configure. The bulk of the bufferbloat effort has been on fixing the last mile, but we are seeing signs of bloat deep within the ISP's network, also.
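To put numbers on "deeper than 5ms", here is a quick back-of-envelope sketch converting between buffer size and worst-case queueing delay at a given port rate. The 1 MB buffer is a hypothetical figure, not a measurement of any particular switch or mikrotik port.

```python
def queue_delay_ms(backlog_bytes, rate_bps):
    """Time to drain a full buffer of backlog_bytes at rate_bps, in milliseconds."""
    return backlog_bytes * 8 * 1000 / rate_bps


def bytes_for_delay(delay_ms, rate_bps):
    """How many bytes of backlog correspond to delay_ms at rate_bps."""
    return rate_bps * delay_ms / 1000 / 8


GBIT = 1_000_000_000

# 5 ms at 1Gbit is 625000 bytes of standing queue.
print(bytes_for_delay(5, GBIT))

# A hypothetical 1 MB FIFO on a 1Gbit port can add 8 ms when full...
print(queue_delay_ms(1_000_000, GBIT))

# ...and the same buffer behind a 100Mbit stepdown can add 80 ms.
print(queue_delay_ms(1_000_000, GBIT // 10))
```

The point of the arithmetic is that a buffer sized in bytes or packets means very different delays at different rates, which is why an AQM that targets time rather than length is attractive at any stepdown.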

BitHaulers

Re: some quick comments on configuring cake

Do you have any tips for LTE connections? Especially ones that go from ~5Mbps to 70Mbps in a few hours? The auto ingress doesn't always act as I'd expect it to, and I'm not sure if it's RouterOS' implementation, or a bug, or me not understanding things.

Re: some quick comments on configuring cake

Hi dtaht,

thanks for posting all this useful information. I already asked a bit in this direction in a separate post, but you really provide massive data (half of which I don't yet fully understand).

But it shows that cake is a complex tool, and it is worth learning more about how to use it.

Don't assume all Mikrotik aficionados are queue/cake experts. Please make it (if possible) simple so all can benefit to the max from your experience.

What do you mean exactly with:

> When shaping dsl especially, it's very important to get the link type "framing" right

This is one of my use cases where queuing is really, really important. Can you give a short example for, say, a link of 5M down and 800k up (or whatever you want to use)?

The other question from BitHaulers

> any tips for LTE connections? Especially ones that go from ~5Mbps to 70Mbps in a few hours?

is also very valid; I have the same problem on my side. LTE (and soon 5G, even worse) is a medium where the "pipe" itself changes heavily within 24h.

In this situation it is really hard to define the pipe size, and queueing with fixed values gets almost impossible.

What can CAKE do in this case?

Again, thanks for the good data you provided.

Re: some quick comments on configuring cake

Thanks dtaht!

I wish we could upvote posts and threads. I'd do both.

Re: some quick comments on configuring cake

> Do you have any tips for LTE connections? Especially ones that go from ~5Mbps to 70Mbps in a few hours? The auto ingress doesn't always act as I'd expect it to, and I'm not sure if it's RouterOS' implementation, or a bug, or me not understanding things.

Don't use them? We get the "how can an end user make LTE generally usable and consistently low latency" question a lot. It's often worse than wifi. We've (bufferbloat.net) been after that entire industry for years now to do better queue management everywhere - the handsets are horrifically overbuffered, the enode-bs as well, the backhaul's both encrypted and underprovisioned...

And instead we get back all sorts of non-useful and actually extra-latency-inducing things like "network slices", and other places where they've thoroughly shot themselves in the foot (like distributed cpus for the wireless connection) from a queue theory perspective, and so on. One company is afraid to even look at the packet headers inside the encapsulation, so no fq or ecn is possible by their lawyer-decreed policy. There's been a ton of good research published on how to make the queuing saner on 3/4/5g, but I'm still not aware of any actual products. I am hoping that the next gen of cell phones from both apple and google get that more right (I just finished up a stint at apple, can't say more), but as for managing the downlink...

Cake's auto-ingress is somewhat suitable for rates that fluctuate slightly, but many/most LTE/5g systems fluctuate too much. We made cake easily and transparently reconfigurable, so with adequate stats from the hardware, or passive measurement of flows passing through it, some answers for managing inbound are more possible... but the right (I'm trying to avoid cursing here) answer was to fq and aqm the enode-bs, improve the backhauls, and stop trying to create for-pay services that don't work.

Various moral equivalents to FQ have long been in play in the rest of the "fixed wireless" market (which consists of a lot of telco folk talking to themselves, rather than recognise that non-5g tech - like most of mikrotik's market - dominates in the field).

A possible avenue for improving LTE inbound is leveraging kathie nichols' new queue estimator that's now in bbr, and the ebpf "pping" tool we're working on... but ENOFUNDING. If they spent a little less on the marketing and a little more on the tech - or opened up more binary blobs - we could make progress, rapidly.

Re: some quick comments on configuring cake

> Various moral equivalents to FQ have long been in play in the rest of the "fixed wireless" market (which consists of a lot of telco folk talking to themselves, rather than recognise that non-5g tech - like most of mikrotik's market - dominates in the field).

Hear, hear! Much like 3GPP trying to reinvent "Internet" and related tech stacks using their own acronyms. ;-)

cake (or fq_codel) vs sfq

I thought I'd write a brief note about SFQ vs CAKE. I think highly of SFQ. If I could go back in time to 2002, when it first arrived in linux, I'd have tried to make it the default, instead of a FIFO, given what I know now. It was *the* fundamental component in wondershaper. Nearly any place you have a FIFO today, SFQ would be better, so long as you have it properly sized.

It is still very possible to get a good result with SFQ at higher rates, if you increase the packet limit, and if you have a good mix of flows, increase the number of flows. However therein lies the rub - if you increase the packet limit, you end up with 100s of ms of bufferbloat - if you don't increase the packet limit you won't be able to achieve full bandwidth at high rates - and setting a per packet limit is not as good as setting a byte limit in an age where a packet can range in size from 64 bytes to 64k bytes.

DRR is an option that can work better than SFQ. That said, if all you have is SFQ, USE IT. Anything that breaks up bursts is good.

So... 4 improvements that came from fq_codel over SFQ.

0) It does better FQ for "sparse" flows than SFQ

1) You don't need to set the queue length, the AQM attempts to hold latencies to 5ms

2) The default number of flows is 1024, which seems to be "enough"

3) fq_codel drops from the head, not the tail of the queue, signaling congestion earlier, and avoiding bursty tail loss

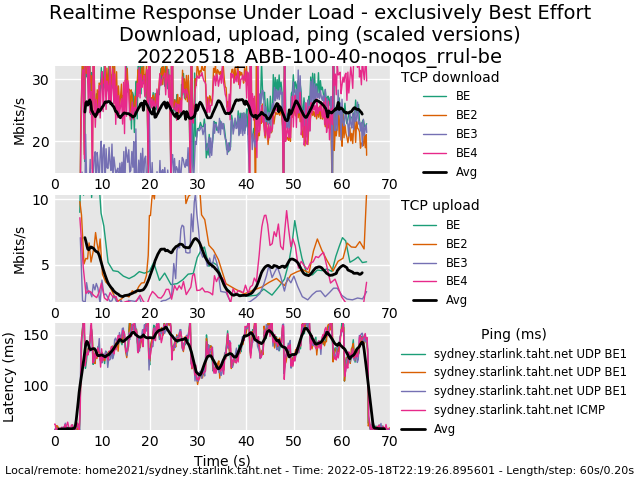

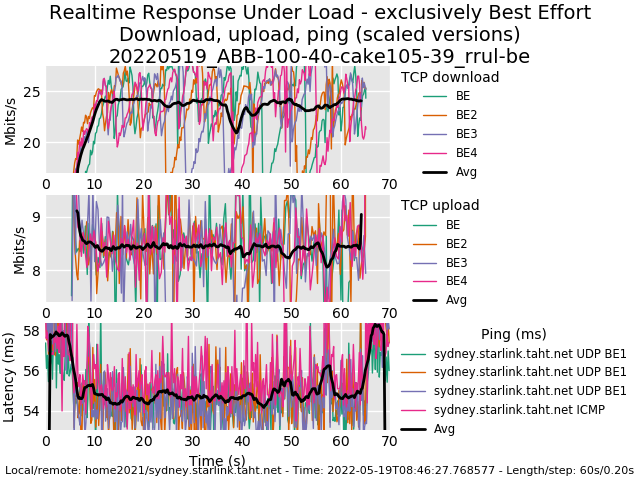

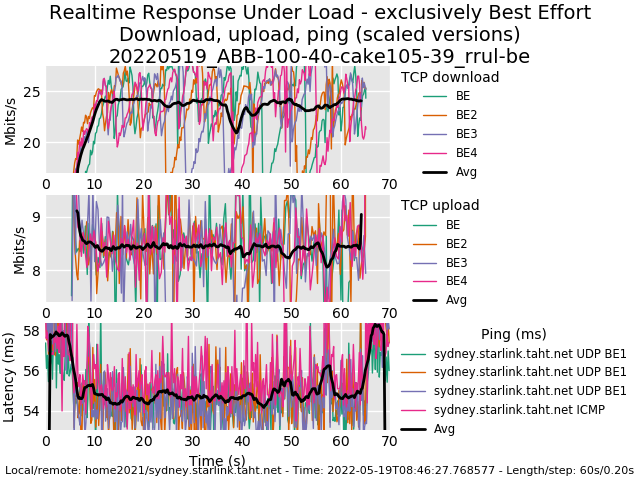

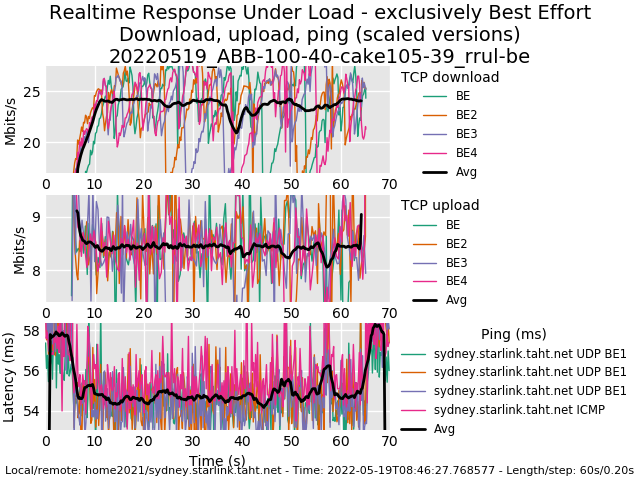

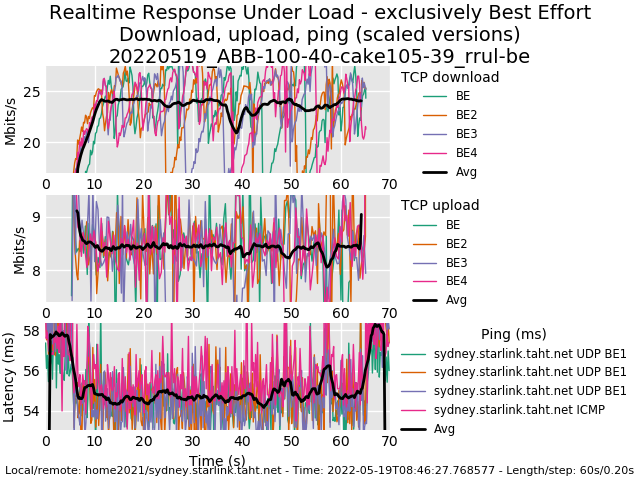

What follows are two "rrul" plots, taken from the flent.org tool we use heavily in the bufferbloat project and highly recommend over, for example, web based benchmarking tools.

They test - simultaneously - 4 tcp upload streams, 4 tcp download streams, 4 measurement streams (both udp and icmp) for 1 minute, by default, and both of these are *good* results. This particular test was against a cablemodem provisioned for 100Mbit down, 10Mbit up. I'll show what a bad result looks like at the end.

Take a look at the third panel on the bottom on both these plots. That's fq_codel's DRR++ derived scheduler, taking the measurement flows and putting them in the front of the queue. SFQ and DRR put new flows at the tail of the FQ queues - so if you have 32 flows, a new flow's arrival will end up at the 33rd queue (which is still WAY better than a FIFO), and be served in turn. A variant of SFQ, called SQF, noticed that it was possible to take a new arrival, serve that first, and thus newer flows - of all sorts, not just voip, dns, tcp syn/syn ack - got a little boost and lower latency than fatter flows. The DRR based design of the fq_codel scheduler on that third panel shows that with the 12 flows going, at this rate, we are saving 10ms on sparser packets - packets that arrive less often than the total time it takes to serve all the other queue-making flows.
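The difference between "new flows go to the tail" and "new flows get served first" is easier to see in a toy. This is a deliberately simplified sketch, not fq_codel's actual code: all packets are the same size (so no quantum or deficit accounting), there is no codel, and the class and function names are made up for illustration.

```python
from collections import deque


class ToyFQ:
    """Round-robin over flows, one packet per visit (equal-sized packets)."""

    def __init__(self, new_flows_first):
        self.new_flows_first = new_flows_first
        self.queues = {}            # flow id -> deque of packets
        self.new_flows = deque()    # flows that just became active
        self.old_flows = deque()    # flows that have already been served

    def enqueue(self, flow, pkt):
        q = self.queues.setdefault(flow, deque())
        if not q:
            # The flow was idle, so it is on neither list: schedule it.
            target = self.new_flows if self.new_flows_first else self.old_flows
            target.append(flow)
        q.append(pkt)

    def dequeue(self):
        # New flows (if any) are always visited before old ones.
        for active in (self.new_flows, self.old_flows):
            if active:
                flow = active.popleft()
                q = self.queues[flow]
                pkt = q.popleft()
                if q:
                    # Still backlogged: go to the tail of the old list.
                    self.old_flows.append(flow)
                return pkt
        return None


def packets_ahead_of_sparse(new_flows_first):
    """Count packets served before a lone 'dns' packet that arrives mid-load."""
    fq = ToyFQ(new_flows_first)
    for flow in ("A", "B", "C"):          # three fat flows, three packets each
        for seq in range(3):
            fq.enqueue(flow, (flow, seq))
    for _ in range(3):                    # one full round: fat flows are now "old"
        fq.dequeue()
    fq.enqueue("dns", ("dns", 0))         # the sparse packet shows up
    ahead = 0
    while fq.dequeue()[0] != "dns":
        ahead += 1
    return ahead


print("tail insertion (SFQ/DRR style):", packets_ahead_of_sparse(False))
print("new flows first (DRR++ style):", packets_ahead_of_sparse(True))
```

With tail insertion the sparse packet waits behind one packet from every fat flow; with the new-flows list it goes out next. Scale the three fat flows up to dozens and that wait is the latency gap described above.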

Now as to why the bandwidths seem a bit different - the tcp flows in the SFQ case are more jittery than the cake one because they hit the end of the SFQ's fifo, have one or more tail drops, and then have to recover more data than the codel AQM does. It turns out we deliver slightly more data in both directions in this test case.

Lastly, what does a bad result look like? Well, this is the basic behavior of a typical (spectrum) cable modem today. The latencies under load grow so bad, that it chokes the upstream flows enormously, and your voice call, well... do you like shouting 600 ft across the room to be heard? Or clicking on a web page and waiting 2 seconds for the first byte?

Best practice for fq_codel: at shaped rates below 4Mbit, you need to scale the target to the time it takes for one MTU to egress. With a 1500 byte MTU at 1Mbit, use 15ms. It generally pays to use a quantum of 300 below 100Mbit.

Cake autoscales these two parameters.
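For anyone setting fq_codel by hand, the scaling rule above can be sketched as below. The raw serialization time of 1500 bytes at 1Mbit works out to 12ms; the 15ms quoted above is a bit higher, presumably to leave headroom for framing (that reading is my assumption). So treat the result as a lower bound rather than the exact value cake would pick; the function names are hypothetical.

```python
def mtu_serialization_ms(rate_bps, mtu_bytes=1500):
    """Time for one MTU-sized packet to egress at rate_bps, in milliseconds."""
    return mtu_bytes * 8 * 1000 / rate_bps


def min_target_ms(rate_bps, mtu_bytes=1500, default_ms=5.0):
    """Lower bound for the codel target: never below one MTU time or the 5ms default."""
    return max(default_ms, mtu_serialization_ms(rate_bps, mtu_bytes))


def suggested_quantum(rate_bps):
    """Quantum of 300 below 100Mbit as suggested above, else a full 1514-byte frame."""
    return 300 if rate_bps < 100_000_000 else 1514


for rate in (1_000_000, 2_000_000, 4_000_000, 50_000_000):
    print(rate, min_target_ms(rate), suggested_quantum(rate))
```

At 4Mbit one MTU takes 3ms, so the default 5ms target already covers it; the adjustment only starts to matter as you go slower.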

My thanks to Jordan Szuch for testing this release candidate of mikrotik on the hapac2 and providing these plots and comfort, that cake and fq_codel were actually working correctly here. SHIP IT.

(really looking forward to more testing and testers)

Last edited by dtaht on Thu Oct 14, 2021 5:38 pm, edited 4 times in total.

Re: some quick comments on configuring cake

Just a few notes on configuring cake's overhead keywords (if in a hurry just read the bold snippets):

DSL:

ADSL* and max rate <= 25/5 Mbps: "overhead 44 atm"

Note: this applies to anything using ATM/AAL5, which nowadays for access links should be only ADSL, ADSL2 and ADSL2+. Theoretically VDSL2 also allows ATM/AAL5, but I have seen no evidence yet that this configuration exists in the real world. 44 bytes is a realistic "bad case" encapsulation overhead seen in the wild; theoretically larger overhead seems possible, albeit very unlikely. To dig deeper into ADSL overhead, curious minds can have a look at https://github.com/moeller0/ATM_overhead_detector.

VDSL2**: "overhead 44 mpu 88"

Note: this actually applies to the PTM carrier instead of ATM/AAL5; PTM can be used on ADSL links as well, and as far as I know some ISPs actually do that.

Also note that PTM uses a 64/65 encapsulation, so if you deduce the shaper settings from modem sync you need to derate the sync rates by 64/65 = 0.984615384615 (cake offers a ptm keyword to perform this derating automatically, but does so by adjusting the accounted packet size instead of simply adjusting the shaper gross rates. BUT for most users the sync will not be the relevant limit, but a shaper/policer at the ISP's end which enforces the contracted rates, which if functional will already have the 64/65 overhead accounted for.)

VDSL2 likely has lower overhead than 44, but the bandwidth sacrifice of specifying a slightly larger per-packet-overhead is small compared to the latency-under-load-increase possible if the per-packet-overhead is too small.

DOCSIS/cable**: "overhead 18 mpu 88"

Note: The real per-packet/per-slot overhead on a DOCSIS link is considerably higher, but the DOCSIS standard mandates that user access rates are shaped as if they had 18 bytes of per-packet overhead, so for us that is the relevant value.
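To show what these keywords do to the accounted packet size, here is a rough sketch of the arithmetic: add the per-packet overhead, apply the minimum packet unit, then apply the ATM cell (48 payload bytes per 53-byte cell) or PTM 64/65 expansion. The function name and the exact order of steps are my assumptions for illustration, not a copy of cake's code.

```python
def accounted_size(ip_len, overhead, mpu=0, framing=None):
    """Bytes the shaper would charge for one packet of ip_len bytes."""
    size = max(ip_len + overhead, mpu)          # overhead first, then the mpu floor
    if framing == "atm":
        # Round up to whole 48-byte cell payloads, each sent as a 53-byte cell.
        size = (size + 47) // 48 * 53
    elif framing == "ptm":
        # One extra byte per 64 bytes, rounded up.
        size = (size * 65 + 63) // 64
    return size


# "overhead 44 atm": a full-size packet and a bare TCP ACK on ADSL
print(accounted_size(1500, 44, framing="atm"))   # 33 cells
print(accounted_size(40, 44, framing="atm"))     # 2 cells

# "overhead 44 mpu 88" on VDSL2, without and with the ptm expansion
print(accounted_size(1500, 44, mpu=88))
print(accounted_size(1500, 44, mpu=88, framing="ptm"))

# "overhead 18 mpu 88" on DOCSIS: small packets are charged as 88 bytes
print(accounted_size(40, 18, mpu=88))
```

The small-packet ATM case is where a wrong setting hurts most: a 40-byte ACK costs 106 bytes on the wire, more than 2.5 times its IP size, so a shaper that ignores this will badly overshoot when the traffic is ACK-heavy.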

Getting initial shaper setting: The quickest way to get reasonable starting values to configure the shaper is to simply run a few speedtests and try to get a feel for the reliably available speeds for down- and up-link and then use these net goodput values (mostly measured as TCP/IP goodput) as gross shaper values for cake. Say you measured 100 arbitrary units, the respective gross rate on a DOCSIS link would be larger or equal to :

100 / ((1500-20-20)/(1500+18)) = 103.97

This will give the shaper a 100-100*100/103.97 = 3.82% margin compared to the true bottleneck rate, which is an acceptable starting point*, which then should be confirmed by a few bufferbloat tests, either via the dslreports speedtest (for configuration see https://forum.openwrt.org/t/sqm-qos-rec ... sting/2803) or waveform's new test under https://www.waveform.com/tools/bufferbloat.

https://openwrt.org/docs/guide-user/net ... qm-details while tailored for OpenWrt's SQM version, contains a lot of background information and configuration advice for those willing to spend more time.

*) The recommended margin is 5-15% of the true bottleneck gross rate, typically a bit more for ingress/download and potentially a bit less for egress/upload, but 3.8% is close enough IFF one is willing to run a few tests to confirm that bufferbloat is sufficiently controlled; otherwise just take 95% of the speedtest result.
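The DOCSIS arithmetic above as a small sketch, so it can be rerun with other overheads or header sizes. It assumes IPv4 and TCP without options (20 + 20 bytes), as in the formula above; the function names are hypothetical.

```python
def gross_rate(goodput, mtu=1500, ip_hdr=20, tcp_hdr=20, overhead=18):
    """Gross link rate needed to carry a measured TCP goodput."""
    payload_fraction = (mtu - ip_hdr - tcp_hdr) / (mtu + overhead)
    return goodput / payload_fraction


def margin_pct(goodput, gross):
    """Margin left when the measured goodput is used as the gross shaper rate."""
    return 100 - 100 * goodput / gross


gross = gross_rate(100)
print(round(gross, 2))                    # 103.97
print(round(margin_pct(100, gross), 2))   # 3.82

# The fallback from the footnote: just take 95% of the speedtest result.
print(0.95 * 100)
```

Plugging in `overhead=44` gives the equivalent figure for the VDSL2 setting, which is a handy sanity check before running the bufferbloat tests.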

DSL:

ADSL* and max rate <= 25/5 Mbps: "overhead 44 atm"

Note: actually anything using ATM/AAL5, which nowadays for access links should be only ADSL, ADSL2, ADSL2+, but theoretically VDSL2 also allows ATM/AAL5 but I have seen no evidence yet that this configuration exsts in the real world. Note 44 Bytes is a realistic "bad case" encapsulation overhead seen in the wild, theoretically larger overhead seems possible albeit very unlikely. To dig deeper into ADSL overhead curious minds can have a look at https://github.com/moeller0/ATM_overhead_detector.

VDSL2**: "overhead 44 mpu 88"

Note: Actually PTM carrier instead of ATM/AAL%, this can actually be used on ADSL links as well, and as far as I know some ISPs actually use that.

Also note that PTM uses a 64/65 encapsulation so if you deduce the shaper settings from modem sync you need derate the syncrarts by 64/65 = 0.984615384615 (cake offers a ptm keyword to perform this derating automatically, but does so by adjusting the accounted packet size instead of simply adjusting the shaper gross rates. BUT for most users the sync will not be the relevant limit, but a shaper/policer at the ISP's end which enforces the contracted rates which if functional will already have the 64/65 overhead accounted for.)

VDSL2 likely has lower overhead than 44, but the bandwidth sacrifice of specifying a slightly larger per-packet-overhead is small compared to the latency-under-load-increase possible if the per-packet-overhead is too small.

DOCSIS/cable**: "overhead 18 mpu 88"

Note: The real per-packet/per-slot overhead on a DOCSIS link is considerably higher, but the DOCSIS standard mandates that user access rates are shapes as if they had 18 bytes of per-packet overhead, so for us that is the relevant value.

Getting initial shaper setting: The quickest way to get reasonable starting values to configure the shaper is to simply run a few speedtests and try to get a feel for the reliably available speeds for down- and up-link and then use these net goodput values (mostly measured as TCP/IP goodput) as gross shaper values for cake. Say you measured 100 arbitrary units, the respective gross rate on a DOCSIS link would be larger or equal to :

100 / ((1500-20-20)/(1500+18)) = 103.97

This will give the shaper a 100-100*100/103.97 = 3.82% margin compared to the true bottleneck rate, which is an acceptable starting point*, which then should be confirmed by a few bufferbloat tests, either via the dslreports speedtest (for configuration see https://forum.openwrt.org/t/sqm-qos-rec ... sting/2803) or waveform's new test under https://www.waveform.com/tools/bufferbloat.
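The arithmetic above can be reproduced with a few lines of Python. This is only a sketch of the calculation in this post; the function names are hypothetical, and the defaults (1500 byte MTU, 20 byte IPv4 and 20 byte TCP headers, 18 bytes of DOCSIS overhead) are the same assumptions used in the formula.

```python
# Sketch of the goodput -> gross rate estimate for a DOCSIS link.
# Function names are made up; the numbers mirror the formula in the post.

def gross_rate_lower_bound(goodput, mtu=1500, ip_hdr=20, tcp_hdr=20, overhead=18):
    """Smallest gross link rate that could have produced this TCP goodput."""
    payload = mtu - ip_hdr - tcp_hdr   # TCP payload bytes per full-size packet
    on_wire = mtu + overhead           # bytes the shaper accounts per packet
    return goodput / (payload / on_wire)


def margin_percent(shaper_rate, gross_rate):
    """How far the shaper sits below the estimated true gross rate, in percent."""
    return 100 - 100 * shaper_rate / gross_rate


if __name__ == "__main__":
    measured = 100  # arbitrary units, as in the post
    gross = gross_rate_lower_bound(measured)
    print(f"gross >= {gross:.2f}, margin = {margin_percent(measured, gross):.2f}%")
```

Setting the shaper to the measured goodput therefore leaves a bit under 4% of headroom, which is why a few bufferbloat tests are still needed to confirm it.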

https://openwrt.org/docs/guide-user/net ... qm-details while tailored for OpenWrt's SQM version, contains a lot of background information and configuration advice for those willing to spend more time.

*) The recommended margin is 5-15% of the true bottleneck gross rate, typically a bit more for ingress/download and potentially a bit less for egress/upload, but 3.8% is close enough IFF one is willing to run a few tests to confirm that bufferbloat is sufficiently controlled; otherwise just take 95% of the speedtest result.

Cake's bandwidth parameter

We try to stress that the default options for cake (essentially just the bandwidth parameter) are good enough for most purposes.

That said, there are two important differences between how cake's bandwidth shaper works vis a vis htb that are useful to highlight.

Token bucket designs date back to the 70s as an easy-to-implement-in-hardware method of doing rate control. Linux HTB along the way (2006) gained the ability to compensate for dsl as cake does, but I don't know if it's configurable in mikrotik's api. Also, our thinking is flavored by the CPE -> up perspective, rather than the ISP -> down, and my hope is that in working with more active ISPs trying to shape their down more directly we'll find ideas worth implementing moving forward.

The more important difference between htb and cake's shaper is that a token bucket is naturally bursty. If a link has lain idle for a while, enough tokens accumulate (the htb quantum and burst parameters) that a line rate burst will pass through htb until the burst parameter is exceeded.

This means that that burst ends up accumulating in the device buffers and invokes jitter. The deficit based shaper in cake never bursts, but does need a cpu that can context switch rapidly enough to ensure a smoother delivery of packets. You can typically run cake hard up against a htb shaper, configured at the same rate, and have cake almost always win. And you can typically configure htb with a higher burst and quantum parameter to have it use less cpu and still more or less effectively shape the connection - but it too starts getting wildly variable as you tweak those parameters to save on cpu to be able to run at higher rates.
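To put a number on the burst effect, here is a toy Python model. It is a sketch, not HTB's or cake's actual code: the function names, the 15000 byte burst and the 20 Mbit/s rate are all assumptions chosen for illustration.

```python
# Toy model (not HTB or cake source): how much data a token bucket releases
# at line rate after an idle period, and how much queueing delay that burst
# adds in front of the real bottleneck. All numbers are illustrative.

def tokens_after_idle(idle_s, rate_bps, burst_bytes):
    """Tokens (in bytes) accumulated while idle, capped by the burst size."""
    return min(burst_bytes, idle_s * rate_bps / 8)


def burst_delay_ms(burst_bytes, bottleneck_bps):
    """Time the bottleneck needs to drain a burst dumped into its buffer."""
    return burst_bytes * 8 * 1000 / bottleneck_bps


if __name__ == "__main__":
    rate = 20_000_000   # shaped rate and bottleneck rate, bits per second
    burst = 15_000      # assumed burst setting: ten 1500 byte packets
    released = tokens_after_idle(idle_s=1.0, rate_bps=rate, burst_bytes=burst)
    print(f"released at line rate: {released:.0f} bytes")
    print(f"extra queueing delay: {burst_delay_ms(released, rate):.1f} ms")
```

A shaper that paces every packet to the configured rate would add none of that delay, at the cost of needing to wake up once per packet; raising the burst value trades cpu for exactly this kind of jitter.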

One thing that we've failed to explain well enough is what we mean when we say "if you have enough cpu". How we think about that over here is a bit different from how others think about it, in that what matters is not clock rate, or straight line instructions per second, but how fast the cpu can context switch. It's often the case that a heavily pipelined cpu cannot context switch as fast as one that isn't.

Running out of cpu when shaping using either method is a PITA. Per-cpu locking is also a problem. You might peg one cpu at 100% and leave the others idle. The linux community has worked very hard to remove a bunch of locks over the years, but at the moment the most progress is being made via eBPF assistance, as in libreqos and preseem. YMMV.

I see that a common means of testing mikrotik is with X tc filters (seemingly 25). Cake can work with those also, but the hope was that fewer tc rules would be needed with cake as a base, and some of the cpu lost to using cake, or even all of it, recovered that way. In general we try to encourage folk to drop all their preconceptions about shaping, multiple tiers of service, and so on, and delete everything they are doing special, try cake bandwidth X, and then measure their results. I'd like a look at an ISP's typical tc rule set to see how tc is being used today.

As for multiple tiers of service - A common configuration is three tiers of htb -> SFQ, SFQ, SFQ. I've seen 6, 9, even as many as 20, and the thing commonly missed by assembling the qdiscs this way is that every separate qdisc you add has a packet limit, and each one adds to your worst case delay. You can typically drop in htb + fq_codel in those configurations and keep your worst case delay bounded better via the aqm, or apply cake which has 3 or 4 tiers of service internally.
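A back-of-the-envelope Python sketch of how those per-qdisc limits stack up. The 127 packet limit per SFQ, the 1500 byte packets and the 10 Mbit/s link are assumptions picked for the example, and the model assumes the worst case where every tier is full at once and all of them drain through the same link.

```python
# Sketch: worst-case queueing delay when several full qdiscs share one link.
# The packet limits, packet size and link rate are illustrative assumptions.

def worst_case_delay_ms(packet_limits, link_bps, packet_bytes=1500):
    """Time to drain every queue if all of them are full of full-size packets."""
    total_bytes = sum(packet_limits) * packet_bytes
    return total_bytes * 8 * 1000 / link_bps


if __name__ == "__main__":
    link = 10_000_000  # bits per second
    for tiers in (1, 3, 9):
        delay = worst_case_delay_ms([127] * tiers, link)
        print(f"{tiers} tier(s): up to {delay:.0f} ms of queue")
```

An AQM such as fq_codel bounds this by dropping or marking on queueing time rather than waiting for a packet limit to be hit, which is why swapping it in under htb helps even if the tier structure stays.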

Re: some quick comments on configuring cake

Some poetry and analysis from Jim Gettys: https://gettys.wordpress.com/2018/02/11 ... -elephant/

Re: some quick comments on configuring cake

This patch makes cake work better with a locally terminated VPN: https://lists.bufferbloat.net/pipermail ... 05257.html

Re: some quick comments on configuring cake

Hi Dave (dtaht),

Firstly, it's a testament to Mikrotik to see key developers such as yourself posting in the forums; secondly, your fine work on CAKE and related has made a global contribution to virtually everyone using the internet, so hats off!

> A modern version of cake has support for the new diffserv LE codepoint. I'd dearly like support for that in mikrotik given how problematic CS1 proved to be, and it's a teeny patch

+1! Would be great if you could submit a request at https://help.mikrotik.com so it is formalised.

> It would be nice if mikrotik had some way of polling and displaying statistics from fq_codel

I agree. As a first step, Mikrotik could fix the queue counters. For example, after enabling CAKE for all WiFi outbound queues on RouterOS 7 (/queue type set wireless-default cake-diffserv=diffserv4 cake-flowmode=dual-dsthost kind=cake), we always see:

/queue monitor

queued-packets: 0

queued-bytes: 0

As of RouterOS 7.1rc5, 'cake-bandwidth' is still a required parameter for LTE interfaces - do you agree there is still benefit using CAKE AQM without bandwidth limits? Mikrotik may be unaware of the opportunity.

Thanks,

Dan

Re: some quick comments on configuring cake

Good catch. The bandwidth parameter should be optional for cake.

As for whether or not you can run an LTE interface at line rate wisely: most of the linux drivers for that were terribly overbuffered, so the backpressure you got arrived very late. I hope that something like AQL or BQL lands for LTE interfaces, and there's some promising work towards actively sensing LTE bandwidth going on over here in the openwrt universe: https://forum.openwrt.org/t/cake-w-adap ... 108848/482

Similarly, the fq_codel for wifi stuff was only supported for 5 chipsets; any place where they can use that, instead of a shaper, is a win. ( https://lwn.net/Articles/705884/ ) . As for slamming a shaper like cake in front of it, my understanding of mikrotik's market is it's mostly ISPs, and that ISPs sell tiers of service, and in that case cake would be good.

As for the noqueue, offloads tend to suck up all the packets so you don't see them. If their queue command could be improved to use the tc -s qdisc statistics - and show loss, backlog, and ecn statistics, that would be nice. The wifi statistics for same are buried under /sys/kernel/debug/iee*/phy*/aqm and a few other aqm files per station further below there.
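For what such a statistics view could pull out, here is a small Python sketch that summarizes JSON qdisc statistics. The sample record is hand-written for illustration, and its key names are assumed to mirror what `tc -s -j qdisc show` prints on a Linux box; check them against real output before relying on them. RouterOS does not expose tc, so this only applies where tc is available.

```python
# Sketch: summarize drops, marks and backlog from tc-style JSON statistics.
# SAMPLE is a hand-written, hypothetical record; the key names are assumed to
# match the output of `tc -s -j qdisc show` and should be verified locally.
import json

SAMPLE = """[
  {"kind": "fq_codel", "handle": "8001:", "dev": "eth0",
   "bytes": 1514000, "packets": 1000, "drops": 12,
   "overlimits": 0, "requeues": 3, "backlog": 4542, "qlen": 3,
   "ecn_mark": 7}
]"""


def summarize(tc_json):
    """Return one small dict per qdisc with the numbers worth plotting."""
    rows = []
    for q in json.loads(tc_json):
        sent = q.get("packets", 0)
        drops = q.get("drops", 0)
        seen = sent + drops
        rows.append({
            "dev": q.get("dev"),
            "kind": q.get("kind"),
            "drops": drops,
            "ecn_marks": q.get("ecn_mark", 0),
            "backlog_bytes": q.get("backlog", 0),
            "drop_pct": 100 * drops / seen if seen else 0.0,
        })
    return rows


if __name__ == "__main__":
    for row in summarize(SAMPLE):
        print(row)
```

On a real Linux system the input string would come from running the tc command and capturing its output, polled at whatever interval the graphing tool wants.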

Some of my personal backstory is that I was a WISP operator in Nicaragua, and I'd upgraded my backbone to wireless-n, only to have it fail (up to 30 seconds of latency) in rain, which in Nicaragua is 2+ months long. So my motivation early on in the bufferbloat effort was to fix fixed wireless, and then go back to my mountaintop there, surf and swim and so on, as soon as the fixes went into linux mainline. My attempts to retire keep being thwarted, the last time I tried to hang it up, Nicaragua had had a near-revolution, and it seemed simpler and safer to just go about fixing the whole internet for everyone... and to try and get on top of new deployments like this one to make sure they get it right...

I miss that mountain a lot, sometimes. Seeing comcast get it right was a high ( https://arxiv.org/abs/2107.13968 ), seeing starlink get it wrong ( https://www.youtube.com/watch?v=c9gLo6Xrwgw ), wasn't. And I can no longer afford to retire. But it looks like mikrotik is well on their way to getting it right, which is a high.

Last edited by dtaht on Sun Nov 14, 2021 8:03 pm, edited 1 time in total.

Re: some quick comments on configuring cake

I have a question about priority QoS in cake. Our customers' IP packets are encapsulated in PPPoE frames, which are then encapsulated in ethernet frames (VPLS tunnel), which then have two MPLS labels placed on them, which then have a VLAN header attached as the outermost layer. Is cake capable of reading the DSCP from the IP packet with all of those layers of encapsulation?

Currently the VLAN header on the outside has the proper VLAN priority set for the priority that we want the packet to be treated, but I don't think cake can read VLAN priority (PCP)?

Some background is, this is a WISP situation where the backhaul link is carrying about 90% VPLS traffic with MPLS labels (traffic for around 400 customers), and the VLAN tag has the priority set to the priority that I want the packet to be treated. We have eight different priority classes, depending on customer class (retail vs enterprise) and type of traffic, and use HTB to put the packet into the correct queue based on the VLAN priority over this backhaul. Currently we find only fifo and red useful for this on RouterOS v6, but with both we start to hit a limit at around 850Mbps of the 1Gbps backhaul link where it starts to drop retail packets even though it hasn't been maxed out. I'm hoping that maybe in RouterOS v7 one of codel or fq_codel or cake would work for this to achieve close to the maximum 1Gbps.

I tried using sfq on RouterOS v6 for this backhaul but performance substantially decreased, likely because the sfq handler got confused by the MPLS labels and put all MPLS traffic (90% of the current traffic) into the same stream.

I haven't found a lot of info online about people queuing packets with MPLS labels with codel/fq_codel/cake

Re: some quick comments on configuring cake

> As for whether or not you can run an LTE interface at line rate wisely, the state of most of the linux drivers for that were terribly overbuffered, so the amount of backpressure you got was very late. I hope that something like AQL or BQL land for LTE interfaces, and there's some promising work towards actively sensing LTE bandwidth going on over here in the openwrt universe: https://forum.openwrt.org/t/cake-w-adap ... 108848/482

From what I gather they perform testing using shell scripts and icmp (ping). Is there a more robust method that has that logic built into the device driver itself? I'm very keen to make this work on all types of wireless technologies that suffer from a high degree of fluctuation in throughput, and in my particular case especially for LTE and its friends.

EDIT:

Both IEEE 802.11 and LTE (RAN) have plenty of real time performance indicators that should fit fine for tuning purposes.

Re: some quick comments on configuring cake

re - mpls. I have no idea if the linux flow dissector is good enough to get that far into the packet to do any good there. (I can look). It can cope with PPPoE. If it can't find "flows", you would end up with a single queue, no matter how varied your traffic was. Since there is seemingly no way to get at statistics in mikrotik, you can't check that there the way you could on linux with (in fq_codel or sfq) tc -s class show. Otherwise you could try to hit it with a bunch of packets from different flows and see if they come out the other side in the same order.

In such a case where fq proves impossible, I might try the pie or codel AQM by themselves to keep queue sizes down. You can certainly use cake in this way as well (flowblind option), and possibly get some differentiation of service via diffserv, but it would be cheaper cpuwise to try htb + aqm.

Re: some quick comments on configuring cake

One of the things discussed on that openwrt thread was using a tcptrace-like tool, and elsewhere, deeply inspecting tcp rtt inflation with ebpf and one of Kathie Nichols' innovations, pping.

Some info here: https://lists.bufferbloat.net/pipermail ... 15772.html

However, mikrotik is far, far behind the curve on ebpf support. The tcptrace-like tool I call wtbb, but it's not under heavy development, lacking funding. I do regard lte's terrible, terrible queuing problems as a high priority, but apparently few in the 5g world claiming low latency are actually investigating or fixing queuing delay via any means. And I hate working for free, and would rather fix wifi.

Re: some quick comments on configuring cake

in direct answer to your question, I don't know of any linux mainline device drivers that do anything clever with lte, like bql or aql. Most of these drivers are out of tree, and I do hope somewhere in some OS, for android or for ios, there's intelligent life down there. One of these days someone will do a study of actual queuing latency in common cellphones.... apple has a new tool (the command line version is called networkQuality, the ios version is under developer settings).

What I have long done is measure the worst case latency under load for my lte connection on my boat, and shape cake to that. I don't care about bandwidth, I care that my videoconferences work well. I experimented with a string of podcasts, gradually decaying my cake parameters to see what it really looked like to end users - with predictably awful results in the last couple I did. The next string of podcasts will have something like that openwrt script on them.

Re: some quick comments on configuring cake

And re-re-reading this question (wow, did my eyes glaze over), cake pays no attention to vlan priorities by itself. It can with a tc rule, assuming it's a modern enough cake. Asking your question of the cake mailing list might get you somewhere...

Re: some quick comments on configuring cake

I noticed one of the RTT schemes is "satellite"... We sometimes use high speed, but high latency (500ms) GEO point-to-point IP links (10-100Mb/s SCPC)... Historically "TCP acceleration" is the approach to deal with these reliable but high RTT links for normal "web traffic" (i.e. some variant of "split TCP" using pepsal/SCPS-TS, sometimes using Hybla[-like] CC). In our case, the sat link has a fixed RTT and fixed/known, non-shared bandwidth – which is why I think CAKE may be of some use. Since we typically route sat links into Mikrotik ROS, CAKE would be easy to apply in v7.1.

But I'm curious on your thoughts if "TCP acceleration" is even needed if a CAKE queue is used on either end of [a high RTT, high BW] bridged L2 satellite link?

Since the TCP CC algorithm/config employed by actual clients can dramatically affect TCP performance with high RTT, it's not that easy to simulate in a lab (e.g. apple's TCP stack responds differently than Linux, same for Windows, etc., and then also differently across those OS versions since TCP CC flavors change) - thus curious what your experience is with CAKE in satellite use cases.

Cake to GEO

If you have a correct estimate of RTT across the satellite link, use rtt that_number + 60ms. Definitely do not use the default rtt estimate (100ms) here as it will not fill the link. "satellite" is a SWAG.
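As a worked example using the figures from the question (500 ms RTT, up to 100 Mb/s), a small Python sketch. The helper names are hypothetical; the 60 ms margin is the one suggested above, and the bandwidth-delay product is shown only to illustrate how much data has to be in flight to keep such a link full.

```python
# Sketch: pick a cake rtt value for a GEO link and show the bandwidth-delay
# product. Helper names are made up; 500 ms and 100 Mb/s come from the question.

def cake_rtt_ms(link_rtt_ms, margin_ms=60):
    """rtt setting = measured satellite RTT plus the suggested 60 ms."""
    return link_rtt_ms + margin_ms


def bdp_bytes(rate_bps, rtt_ms):
    """Bytes in flight needed to keep the link busy for one round trip."""
    return rate_bps * rtt_ms / 1000 / 8


if __name__ == "__main__":
    rtt = 500            # ms, fixed GEO round trip from the question
    rate = 100_000_000   # bits per second
    print(f"cake rtt setting: {cake_rtt_ms(rtt)} ms")
    print(f"BDP at {rate // 1_000_000} Mb/s: {bdp_bytes(rate, rtt) / 1e6:.2f} MB")
```

With megabytes needing to be in flight per round trip, an AQM tuned for a 100 ms path would signal congestion long before the sender has had a chance to fill the pipe.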

cake supports RFC 3168-style ECN - if you enable that on your endpoints you can do congestion control losslessly. Win. The FQ portion will keep lower rate request/response and voip protocols separate from the AQM, and (nearly) never drop those.

https://www.bufferbloat.net/projects/ce ... nable_ECN/ [1]

There are a bunch of other ways to go with a "tcp accelerator" depending on your topology. If you are using a tcp proxy, enabling ecn on those endpoints will control the amount of data in flight. Using a delay sensitive tcp will, also.

I would like very much a flent "rrul" test from an actual real-world satellite link, with and/or without a proxy. I have plenty from starlink, nothing from GEO, would love to emulate the other new constellations coming up. some packet captures too!

[1] Apple has made it more difficult to use ECN of late. The additional sysctl required to re-enable ecn negotiation always is

sudo sysctl -w net.inet.tcp.disable_tcp_heuristics=1

See also:

https://github.com/apple-opensource/xnu ... che.c#L164

This disables mptcp and tfo also.

Your core question "are proxies even needed", I didn't answer. Please go measure.

Re: some quick comments on configuring cake

Good evening, and thank you Dave for your many years of work along with the rest of your team combating bufferbloat! I have been following along for many years, and still feel like I know so little.

I am so glad to finally have fq_codel and cake in Mikrotik! Previously I had run an OpenBSD router at home for many years and it was great, but I have been running Mikrotik for a few years now. Anyhow, on to my testing..

## INFO

## Mikrotik CCR-1009

## RouterOS 7.1 Stable

## AT&T VDSL2 100/20

## San Antonio, Tx.

## Results of a ping to test server with unloaded pipe for reference:

--- dallas.starlink.taht.net ping statistics ---

27 packets transmitted, 27 received, 0% packet loss, time 26035ms

rtt min/avg/max/mdev = 28.288/28.699/29.860/0.314 ms

#### Test 1 - No queue

#### Test 2 - CAKE defaults

name="cake-default" kind=cake cake-bandwidth=0bps cake-overhead=0 cake-overhead-scheme="" cake-rtt=100ms

cake-diffserv=diffserv3 cake-flowmode=triple-isolate cake-nat=no cake-wash=no cake-ack-filter=none

#### Test 3 - Cake with NAT on download/upload and ACK filter on upload

name="cake-up" kind=cake cake-bandwidth=0bps cake-overhead=0 cake-overhead-scheme="" cake-rtt=100ms

cake-diffserv=diffserv4 cake-flowmode=triple-isolate cake-nat=yes cake-wash=no cake-ack-filter=filter

name="cake-down" kind=cake cake-bandwidth=0bps cake-overhead=0 cake-overhead-scheme="" cake-rtt=100ms

cake-diffserv=diffserv4 cake-flowmode=triple-isolate cake-nat=yes cake-wash=no cake-ack-filter=none

#### Test 4 - Adding bridged ptm

name="cake-up" kind=cake cake-bandwidth=0bps cake-overhead=22 cake-atm=ptm cake-overhead-scheme=bridged-ptm

cake-rtt=100ms cake-diffserv=diffserv4 cake-flowmode=triple-isolate cake-nat=yes cake-wash=no cake-ack-filter=filter

name="cake-down" kind=cake cake-bandwidth=0bps cake-overhead=22 cake-atm=ptm cake-overhead-scheme=bridged-ptm

cake-rtt=100ms cake-diffserv=diffserv4 cake-flowmode=triple-isolate cake-nat=yes cake-wash=no cake-ack-filter=none

#### Test 5 - Adding wash on download

name="cake-up" kind=cake cake-bandwidth=0bps cake-overhead=22 cake-atm=ptm cake-overhead-scheme=bridged-ptm

cake-rtt=100ms cake-diffserv=diffserv4 cake-flowmode=triple-isolate cake-nat=yes cake-wash=no cake-ack-filter=filter

name="cake-down" kind=cake cake-bandwidth=0bps cake-overhead=22 cake-atm=ptm cake-overhead-scheme=bridged-ptm

cake-rtt=100ms cake-diffserv=diffserv4 cake-flowmode=triple-isolate cake-nat=yes cake-wash=yes cake-ack-filter=none

#### Test 6 - Remove bridged ptm, and set overhead to 22 (same as bridged ptm) and also add MPU 44 (would not let me save that with bridged ptm selected)

name="cake-up" kind=cake cake-bandwidth=0bps cake-overhead=22 cake-mpu=44 cake-atm=ptm cake-overhead-scheme=""

cake-rtt=100ms cake-diffserv=diffserv4 cake-flowmode=triple-isolate cake-nat=yes cake-wash=no cake-ack-filter=filter

name="cake-down" kind=cake cake-bandwidth=0bps cake-overhead=22 cake-mpu=44 cake-atm=ptm cake-overhead-scheme=""

cake-rtt=100ms cake-diffserv=diffserv4 cake-flowmode=triple-isolate cake-nat=yes cake-wash=yes cake-ack-filter=none

Admittedly, AT&T is doing a pretty darn good job as of late. Bufferbloat used to be much worse with this same setup; I know they have pushed out several firmware updates over the years to this modem. It is especially head and shoulders better than my old cable modem with Spectrum. I was lucky to fight through that horror to finally learn that it had a Puma 6 chipset, which was known after a period of time to actually introduce latency to varying degrees at random! *GRR*

Anyhow, I can't leave well enough alone and why leave my buckets up to them to control, so here I am! Also, I have noticed there has not been much testing that I could find so figured I would help! Next week, I am supposed to get my new 5009 router so that will free up this one for more lab style testing. I have a CRS309 (10gb switch), CRS326 (1gb with 10gb uplinks) here so even though it would be local.. maybe I can help by doing some testing as you mentioned about like 10gb -> 1gb, etc.

Let me know how I can help, and I look forward to your feedback on my results. P.S. - thank you in advance for letting me use your server. I had done some testing against mine in Dallas, but was worried that it didn't have enough CPU to generate the traffic needed?

One more edit... here is my results from waveform's test after the last config:

https://www.waveform.com/tools/bufferbl ... 99892a2b21

I am so glad to finally have fq_codel and cake in Mikrotik! Previously I had run an OpenBSD router at home for many years and it was great, but I have been running Mikrotik for a few years now. Anyhow, on to my testing..

## INFO

## Mikrotik CCR-1009

## RouterOS 7.1 Stable

## AT&T VDSL2 100/20

## San Antonio, Tx.

## Results of a ping to test server with unloaded pipe for reference:

--- dallas.starlink.taht.net ping statistics ---

27 packets transmitted, 27 received, 0% packet loss, time 26035ms

rtt min/avg/max/mdev = 28.288/28.699/29.860/0.314 ms

#### Test 1 - No queue

#### Test 2 - CAKE defaults

name="cake-default" kind=cake cake-bandwidth=0bps cake-overhead=0 cake-overhead-scheme="" cake-rtt=100ms

cake-diffserv=diffserv3 cake-flowmode=triple-isolate cake-nat=no cake-wash=no cake-ack-filter=none

#### Test 3 - Cake with NAT on download/upload and ACK filter on upload

name="cake-up" kind=cake cake-bandwidth=0bps cake-overhead=0 cake-overhead-scheme="" cake-rtt=100ms

cake-diffserv=diffserv4 cake-flowmode=triple-isolate cake-nat=yes cake-wash=no cake-ack-filter=filter

name="cake-down" kind=cake cake-bandwidth=0bps cake-overhead=0 cake-overhead-scheme="" cake-rtt=100ms

cake-diffserv=diffserv4 cake-flowmode=triple-isolate cake-nat=yes cake-wash=no cake-ack-filter=none

#### Test 4 - Adding bridged ptm

name="cake-up" kind=cake cake-bandwidth=0bps cake-overhead=22 cake-atm=ptm cake-overhead-scheme=bridged-ptm

cake-rtt=100ms cake-diffserv=diffserv4 cake-flowmode=triple-isolate cake-nat=yes cake-wash=no cake-ack-filter=filter

name="cake-down" kind=cake cake-bandwidth=0bps cake-overhead=22 cake-atm=ptm cake-overhead-scheme=bridged-ptm

cake-rtt=100ms cake-diffserv=diffserv4 cake-flowmode=triple-isolate cake-nat=yes cake-wash=no cake-ack-filter=none

#### Test 5 - Adding wash on download

name="cake-up" kind=cake cake-bandwidth=0bps cake-overhead=22 cake-atm=ptm cake-overhead-scheme=bridged-ptm

cake-rtt=100ms cake-diffserv=diffserv4 cake-flowmode=triple-isolate cake-nat=yes cake-wash=no cake-ack-filter=filter

name="cake-down" kind=cake cake-bandwidth=0bps cake-overhead=22 cake-atm=ptm cake-overhead-scheme=bridged-ptm

cake-rtt=100ms cake-diffserv=diffserv4 cake-flowmode=triple-isolate cake-nat=yes cake-wash=yes cake-ack-filter=none

#### Test 6 - Remove bridged ptm, and set overhead to 22 (same as bridged ptm) and also add MPU 44 (would not let me save that with bridged ptm selected)

name="cake-up" kind=cake cake-bandwidth=0bps cake-overhead=22 cake-mpu=44 cake-atm=ptm cake-overhead-scheme=""

cake-rtt=100ms cake-diffserv=diffserv4 cake-flowmode=triple-isolate cake-nat=yes cake-wash=no cake-ack-filter=filter

name="cake-down" kind=cake cake-bandwidth=0bps cake-overhead=22 cake-mpu=44 cake-atm=ptm cake-overhead-scheme=""

cake-rtt=100ms cake-diffserv=diffserv4 cake-flowmode=triple-isolate cake-nat=yes cake-wash=yes cake-ack-filter=none
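For anyone wondering what the overhead, MPU and ptm settings in Tests 4 to 6 actually change, here is a rough Python sketch of how cake sizes a packet for its shaper arithmetic, as I understand it: add the overhead, round up to the MPU, then add one byte per 64 bytes (or part thereof) for PTM's 64b/65b encoding. The function name and the example packet sizes are mine, and this is an approximation of the accounting, not a copy of the kernel code.

```python
def cake_wire_size(pkt_len: int, overhead: int = 22, mpu: int = 44, ptm: bool = True) -> int:
    """Approximate on-the-wire size cake would charge for one packet.

    Assumed order of operations: add per-packet overhead, enforce the
    minimum packet unit (MPU), then apply the PTM 64b/65b expansion.
    """
    size = pkt_len + overhead
    size = max(size, mpu)
    if ptm:
        # One extra byte for every 64 bytes, or part thereof.
        size += (size + 63) // 64
    return size


# Illustrative packet sizes (hypothetical, not taken from the test runs).
for pkt_len in (1500, 40, 10):
    print(pkt_len, "->", cake_wire_size(pkt_len))
```

With the Test 6 values (overhead 22, MPU 44, ptm), a 1500 byte packet is charged as 1546 bytes and a 40 byte ACK as 63 bytes, so the relative cost of the framing is much higher for small packets.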

Admittedly, AT&T is doing a pretty darn good job as of late. Bufferbloat used to be much worse with this same setup; I know they have pushed out several firmware updates to this modem over the years. It is head and shoulders better than my old cable modem with Spectrum. I was lucky to fight through that horror and finally learn that it had a Puma 6 chipset, which was known, after a period of time, to actually introduce latency to varying degrees at random! *GRR*

Anyhow, I can't leave well enough alone, and why leave my buckets up to them to control? So here I am! Also, I have not been able to find much testing, so I figured I would help! Next week I am supposed to get my new 5009 router, which will free up this one for more lab-style testing. I have a CRS309 (10gb switch) and a CRS326 (1gb with 10gb uplinks) here, so even though it would be local, maybe I can help by doing some of the testing you mentioned, like 10gb -> 1gb, etc.

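Let me know how I can help, and I look forward to your feedback on my results. P.S. - thank you in advance for letting me use your server. I had done some testing against mine in Dallas, but was worried that it didn't have enough CPU to generate the traffic needed.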

One more edit... here are my results from waveform's test after the last config:

https://www.waveform.com/tools/bufferbl ... 99892a2b21

Last edited by kevinb361 on Fri Dec 10, 2021 7:13 am, edited 5 times in total.

Re: some quick comments on configuring cake

Thx so much for testing. I have a low standard right now... "does it crash?", so far, so good.

Your first result, sans cake, was really quite good, and indicates your AT&T link has only about 20ms of buffering in it, or so. Believe it or not, that's actually "underbuffered" by prior standards, and makes it harder for a single flow to sustain full rate. But: a little underbuffering is totally fine by me, and I don't care all that much if a single flow is unable to achieve full rate, I'd rather have low latency.
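To put "underbuffered" in numbers, here is a back-of-the-envelope Python sketch comparing the bandwidth-delay product (the classic rule of thumb for how much buffer a single Reno-style flow needs to keep a link full) against roughly 20ms of buffering. The 20 Mbit/s uplink rate and the ~28.7ms unloaded RTT come from the test setup posted above; treating the 20ms as applying to the uplink is my assumption.

```python
def bdp_bytes(rate_bps: float, rtt_s: float) -> float:
    """Bandwidth-delay product: bytes in flight needed to keep the link full."""
    return rate_bps * rtt_s / 8


def buffer_bytes(rate_bps: float, queue_delay_s: float) -> float:
    """Bytes of queue implied by a given worst-case queueing delay at link rate."""
    return rate_bps * queue_delay_s / 8


UPLINK_BPS = 20e6        # AT&T VDSL2 100/20, upload direction (assumed)
BASE_RTT_S = 0.0287      # average unloaded ping RTT to the test server
QUEUE_DELAY_S = 0.020    # "about 20ms of buffering"

bdp = bdp_bytes(UPLINK_BPS, BASE_RTT_S)
buf = buffer_bytes(UPLINK_BPS, QUEUE_DELAY_S)
print(f"BDP ~{bdp:.0f} bytes, buffer ~{buf:.0f} bytes, ratio {buf / bdp:.2f}")
```

A ratio below 1.0 means the buffer holds less than one BDP, which is why a single flow can have trouble sustaining full rate after a loss while latency stays pleasantly low.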

It's easier to determine the buffer depth via a single upload test like this:

flent -x --step-size=.05 --socket-stats -t the_options_you_are_testing --te=upload_streams=1 -H the_closest_server tcp_nup

Use the gui to print the "tcp_rtt" stats. If you use the -t option to name your different runs, you can also do comparison plots via "add other data files" in flent-gui.

There are servers in Atlanta and in Fremont, California, if either of those would be closer for you.

Last edited by dtaht on Sat Dec 11, 2021 10:05 pm, edited 2 times in total.

Re: some quick comments on configuring cake

OK, ok, I gave in, in order to do science, could you also try a tcp_nup with upload_streams=4? and =16?

The Test 1 *appears* to show an old issue rearing its head - tcp global synchronization - the amount of queue is so short that all the flows synchronize and drop simultaneously, as per panel 3 of your first plot, but in order to do "science" here, simplifying the test to just uploads would help.

Secondly it appears that something on the path is treating the CS1 codepoint as higher priority than the CS0 codepoint, when CS1 is supposed to be "background".
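If it helps to keep the runs consistent, here is a small Python sketch that only builds (and prints) the flent command lines for 1, 4 and 16 upload streams, reusing the options from the tcp_nup command given earlier in the thread. The helper name, the title suffix convention and the placeholder host are my own; substitute your real server and label.

```python
import shlex
from typing import List


def flent_nup_command(host: str, title: str, streams: int) -> List[str]:
    """Build the argv for one tcp_nup run with the given number of upload streams."""
    return [
        "flent", "-x", "--step-size=.05", "--socket-stats",
        "-t", f"{title}-{streams}streams",      # names the run for comparison plots
        f"--te=upload_streams={streams}",
        "-H", host,
        "tcp_nup",
    ]


# Placeholder host and title, as in the original command.
commands = [flent_nup_command("the_closest_server", "the_options_you_are_testing", n)
            for n in (1, 4, 16)]
for cmd in commands:
    print(shlex.join(cmd))
```

Printing rather than executing keeps this safe to paste anywhere; the lines can then be run by hand or passed to `subprocess.run` one at a time.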

Re: some quick comments on configuring cake

Does that VDSL device do hardware flow control? Or are you shaping via cake via htb? (I'm happy to hear the bandwidth=0 parameter seems to be working otherwise.) The only way I can think of you getting results this good is if the VDSL modem is exerting flow control....

Anyway, your last result is a clear win over what you had before, methinks. I'd like a tcp_nup test of that config too, when you find the time.

Re: some quick comments on configuring cake

No crashing, I have run the CCR1009 very heavy for several days without issue! Full transparency: tonight I am on the RB5009. It just showed up yesterday, so I have been toying with it and will be using it for my testing tonight. I can always swap around if you would like. Either way, they are both running 7.1 Stable.

I agree with your statement on under buffering and would also much prefer lower latency than a single stream achieving full rate.

Yes sir, I am in San Antonio, so the server is ~30ms from me. Here is the result with the test requested, sans queueing:

Re: some quick comments on configuring cake

HAH, I was hoping to pique your interest!

I just thought of something that is very annoying about this modem/"router" from ATT. I have it in 'bypass' mode so that it assigns the public IP to the router; however, the traffic is still NAT'd, for lack of better words. I am not sure how it actually works, but it still has its own state table, etc. The FIOS guys have figured out a way to bypass it because they also have an ONT, etc. But since this is DSL, I am stuck with whatever they are doing inside the black box. Maybe this is what is causing the codepoint funny business, as I am not doing anything with DSCP, etc.

On to the data!

Re: some quick comments on configuring cake

I am not sure if the VDSL device does or not, to be honest. It is an ATT-branded box, model BGW210. I have it in passthrough mode, but as stated above it still does some black magic NAT while it 'passes' the public IP to my router.

The very first test I posted was without any queue in the Mikrotik router. After that, everything was with cake, and bandwidth=0 definitely works well! Obviously, tweaking that helps it out, but it does well as a general out-of-the-box setup.

** NOTE ** I just realized I had made a mistake in my config. I was leaving bandwidth at 0 in the cake config and setting the target max limit for upload to 19M under the simple queue general settings, with no limit on the download. I will need to try these tests again later with that set to unlimited and the bandwidth set within cake itself. Curious to see if that makes any difference.

Setting up that last config with tcp_nup results:

Re: some quick comments on configuring cake

OK.

0) Still mostly very happy it doesn't crash.

1) Your dsl device's buffer is sized in packets, not bytes. The reason we only saw a 20ms RTT before on the rrul test, vis-a-vis the much larger RTT on the tcp_nup test, is that the acks from the return flows on the path filled up the queue also. I leave it as an exercise now for the reader to calculate the packet buffer length on this device...

2) I figured I was either looking at a shaper above cake, or at dsl flow control. (I like hw flow control, btw. I was perpetually showing off an ancient dsl modem with a 4 packet buffer and hw flow control + fq-codel in the early days, as FQ + the time-based AQM vs a fifo worked with that beautifully and cost 99% less cpu to do that way. Sadly most dsl modems moved to a switch and don't provide that backpressure anymore.) I'm not quite sure you just tested that without a shaper.

3) I do want to verify: you are not using BBR on your client? The 5ms simultaneous drops are still a mite puzzling.
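As a starting point for the exercise in 1), here is a hedged Python sketch of the arithmetic. A buffer counted in packets produces less delay when it is partly filled with small acks than when it is filled with full-size data packets. The measured tcp_nup RTT lives in the attached plot and is not reproduced here, so the 100 packet buffer, the 50/50 data/ack mix and the packet sizes below are placeholders of my own, not results.

```python
def queue_delay_s(n_packets: int, data_fraction: float, rate_bps: float,
                  data_bytes: int = 1500, ack_bytes: int = 64) -> float:
    """Delay from a full packet-counted FIFO holding a mix of data packets and acks."""
    avg_bytes = data_fraction * data_bytes + (1 - data_fraction) * ack_bytes
    return n_packets * avg_bytes * 8 / rate_bps


def packets_from_delay(delay_s: float, rate_bps: float, pkt_bytes: int = 1500) -> float:
    """Invert the relation: buffer length in packets implied by an observed delay."""
    return delay_s * rate_bps / (8 * pkt_bytes)


RATE_BPS = 20e6       # 20 Mbit/s uplink from the test setup
N_HYPOTHETICAL = 100  # placeholder buffer length, NOT a measurement

upload_only = queue_delay_s(N_HYPOTHETICAL, 1.0, RATE_BPS)  # tcp_nup-like load
mixed = queue_delay_s(N_HYPOTHETICAL, 0.5, RATE_BPS)        # rrul-like mix (assumed)
print(f"upload only: {upload_only * 1000:.1f} ms, mixed: {mixed * 1000:.1f} ms")
print(f"packets implied by upload-only delay: "
      f"{packets_from_delay(upload_only, RATE_BPS):.0f}")
```

To use it on real data, read the induced delay (loaded RTT minus the unloaded ~28.7ms) off the tcp_nup plot and feed it to `packets_from_delay`. Per-packet framing overhead is ignored here, so the answer is only approximate.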
